Presenting CT-ICP, a state-of-the-art LiDAR-only Odometry and Mapping method from Kitware Europe, presented at ICRA 2022

We are pleased to present this new method for LiDAR-Only Odometry, developed by Kitware Europe in collaboration with MINES Paris PSL. This work was presented at ICRA 2022 in Philadelphia and was among the three finalists for the conference's Outstanding Paper Award.

Context

Kitware Europe (KEU) has long experience with LiDAR-based SLAM and has been developing its own LiDAR SLAM, which is used in production environments by many clients and is easily accessible either as part of LidarView or via the ROS environment.

However, many challenges remain in LiDAR SLAM: the large quantity of information to process, the real-time constraint, the motion distortion (or rolling shutter) due to the motion of the LiDAR during the acquisition of a scan, mobile objects introducing outlier points, etc.

To address these issues, KEU shared the supervision of the PhD thesis of Pierre Dellenbach with the robotics laboratory of MINES Paris PSL university.

It is in the context of this thesis that the article CT-ICP: Real-time Elastic LiDAR Odometry with Loop Closure was presented at ICRA 2022.

CT-ICP: What’s new in the method

In this work, we present a state-of-the-art LiDAR-Only odometry which, as demonstrated in the paper, runs in real time for driving scenarios.

Unlike more standard approaches such as LOAM [1], CT-ICP registers each frame against a dense point cloud (similarly to [2]). However, unlike [2], which did not operate in real time, this work stores the point cloud in an efficient search structure that allows fast neighborhood computation (we currently use a voxel hash map). This allows our method to run in real time.
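To make the idea of a voxel hash map concrete, here is a minimal Python sketch. It is only an illustration of the general technique, not the data structure used in the CT-ICP code base: the class name `VoxelHashMap`, its methods, and the voxel size are all hypothetical. Points are bucketed by their integer voxel coordinates in a dictionary, so a neighborhood query only visits the few voxels around the query point instead of the whole map.

```python
import math
from collections import defaultdict


class VoxelHashMap:
    """Toy voxel hash map (hypothetical): points bucketed by voxel coordinates."""

    def __init__(self, voxel_size):
        self.voxel_size = voxel_size
        self.voxels = defaultdict(list)  # (i, j, k) -> list of (x, y, z) points

    def _key(self, point):
        # Integer voxel coordinates; floor keeps negative coordinates consistent.
        return tuple(math.floor(c / self.voxel_size) for c in point)

    def insert(self, point):
        self.voxels[self._key(point)].append(tuple(point))

    def neighbors(self, query, radius):
        """Return the map points within `radius` of `query`, nearest first."""
        # Only voxels overlapping the bounding box of the search ball are visited.
        lo = self._key([c - radius for c in query])
        hi = self._key([c + radius for c in query])
        found = []
        for i in range(lo[0], hi[0] + 1):
            for j in range(lo[1], hi[1] + 1):
                for k in range(lo[2], hi[2] + 1):
                    for p in self.voxels.get((i, j, k), ()):
                        d = math.dist(p, query)
                        if d <= radius:
                            found.append((d, p))
        return [p for _, p in sorted(found)]


# Usage: build a tiny map and query the neighborhood of the origin.
voxel_map = VoxelHashMap(voxel_size=1.0)
for p in [(0.1, 0.2, 0.0), (0.9, 0.1, 0.0), (5.0, 5.0, 5.0), (-0.3, 0.0, 0.1)]:
    voxel_map.insert(p)
near = voxel_map.neighbors((0.0, 0.0, 0.0), radius=1.0)
```

The cost of a query depends on the number of points in the visited voxels rather than on the total size of the map, which is what makes this kind of structure attractive for real-time registration against a dense map.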

Below, we see our method in action on a synthetic dataset generated with the CARLA simulator:

The main contribution of our paper is the compensation of the motion distortion that occurs during the acquisition of a scan. 3D LiDARs typically acquire frames at a frequency of 10-20 Hz. During the acquisition of a scan, however, the sensor is typically moving, which can cause points in the scan to be distorted (on a highway, at 130 km/h, this typically results in 1 to 2 meters of difference between a distorted point and the real point position).
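The order of magnitude quoted above can be checked with a few lines of arithmetic. The sketch below assumes a sensor moving in a straight line at constant speed and a scan that spans the full acquisition period; the function name `scan_displacement_m` is ours, for illustration only.

```python
def scan_displacement_m(speed_kmh, scan_rate_hz):
    """Distance travelled by the sensor during one full scan, in meters."""
    speed_ms = speed_kmh / 3.6            # km/h -> m/s
    scan_duration_s = 1.0 / scan_rate_hz  # one scan per acquisition period
    return speed_ms * scan_duration_s


for rate_hz in (10.0, 20.0):
    full = scan_displacement_m(130.0, rate_hz)
    # If the scan is expressed at its mid-scan pose, the worst-case
    # displacement of a point is half of the full-scan displacement.
    print(f"{rate_hz:.0f} Hz: {full:.2f} m over the scan, {full / 2:.2f} m from mid-scan")
```

Under these assumptions the sensor travels roughly 1.8 m (at 20 Hz) to 3.6 m (at 10 Hz) during a scan, which is consistent with errors of the order of a meter or more on individual points.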

Our work addresses this by proposing an elastic registration of each scan against our map. Instead of the usual 6 degrees of freedom of a rigid pose, we give each scan 12 degrees of freedom, which allows the scan to distort itself onto the map during registration and results in a very precise insertion into the map.
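One way to picture these 12 degrees of freedom is as two rigid poses per scan, one at the beginning and one at the end of the acquisition, with the pose of each point interpolated between them according to its timestamp. The Python sketch below illustrates that idea under this assumption. It is not taken from the CT-ICP code base: the function names are hypothetical, poses are written as a unit quaternion `(w, x, y, z)` plus a translation, and the optimization that actually estimates the two poses is not shown.

```python
import math


def slerp(q0, q1, t):
    """Spherical linear interpolation between unit quaternions (w, x, y, z)."""
    dot = sum(a * b for a, b in zip(q0, q1))
    if dot < 0.0:  # flip one quaternion to follow the shorter arc
        q1, dot = tuple(-c for c in q1), -dot
    if dot > 0.9995:  # nearly identical rotations: linear blend is accurate enough
        q = tuple(a + t * (b - a) for a, b in zip(q0, q1))
    else:
        theta = math.acos(dot)
        w0 = math.sin((1.0 - t) * theta) / math.sin(theta)
        w1 = math.sin(t * theta) / math.sin(theta)
        q = tuple(w0 * a + w1 * b for a, b in zip(q0, q1))
    norm = math.sqrt(sum(c * c for c in q))
    return tuple(c / norm for c in q)


def rotate(q, v):
    """Rotate the 3D vector v by the unit quaternion q = (w, x, y, z)."""
    w, x, y, z = q
    # With u = (x, y, z): t = 2 * (u x v), then v' = v + w * t + u x t.
    tx = 2.0 * (y * v[2] - z * v[1])
    ty = 2.0 * (z * v[0] - x * v[2])
    tz = 2.0 * (x * v[1] - y * v[0])
    return (
        v[0] + w * tx + (y * tz - z * ty),
        v[1] + w * ty + (z * tx - x * tz),
        v[2] + w * tz + (x * ty - y * tx),
    )


def undistort_point(point, alpha, pose_begin, pose_end):
    """Map a sensor-frame point to the world frame with an interpolated pose.

    alpha in [0, 1] is the normalized timestamp of the point within the scan.
    Each pose is (quaternion, translation): two poses give 2 x 6 = 12 DoF.
    """
    (q_b, t_b), (q_e, t_e) = pose_begin, pose_end
    q = slerp(q_b, q_e, alpha)                                # interpolated rotation
    t = tuple(b + alpha * (e - b) for b, e in zip(t_b, t_e))  # interpolated translation
    p = rotate(q, point)
    return tuple(pi + ti for pi, ti in zip(p, t))


# Usage: the sensor moves 2 m forward during the scan without rotating.
identity = (1.0, 0.0, 0.0, 0.0)
begin = (identity, (0.0, 0.0, 0.0))
end = (identity, (2.0, 0.0, 0.0))
mid_point = undistort_point((10.0, 0.0, 0.0), 0.5, begin, end)
```

In this example, a point measured halfway through the scan is shifted by half of the sensor's displacement, whereas a single rigid pose would apply the same shift to every point of the scan.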

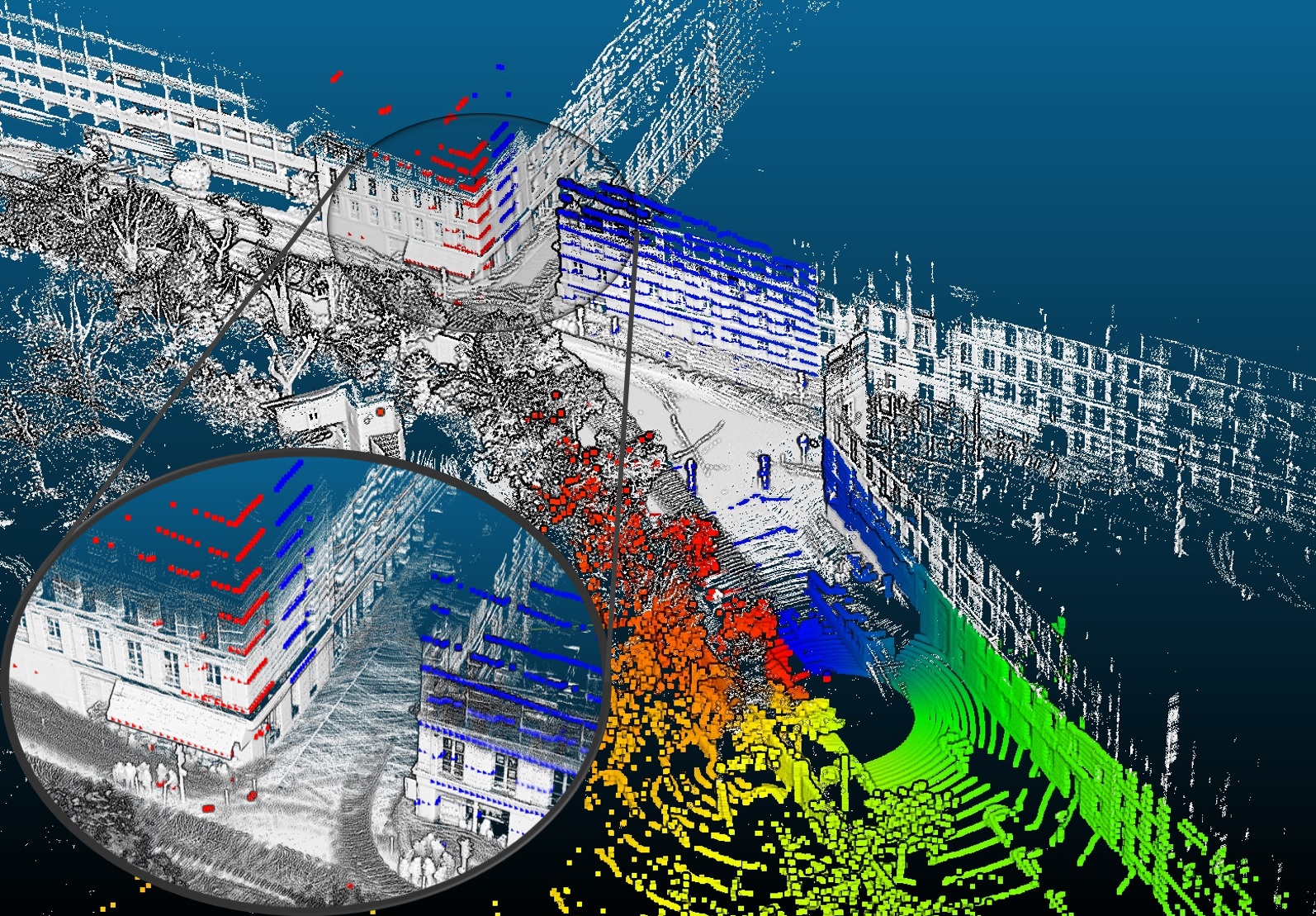

Below, we can see the distortion in action on the right-hand side (the points in color are the distorted points, while the points in white are the original points):

Combining these two ideas yields a LiDAR-Only odometry which reaches state-of-the-art results on the KITTI odometry benchmark [3].

The code is open source under a permissive license and is available at https://github.com/jedeschaud/ct_icp

While we are already continuously improving the quality, performance and accuracy of the code, there is more just around the corner…

The Future of CT-ICP is Inertial

Though the focus of this work was LiDAR-Only odometry, sometimes a LiDAR is simply not good enough by itself. We participated in the HILTI Challenge at ICRA 2022, and despite our best efforts the dataset was too challenging for our LiDAR-Only solution.

The goal of this benchmark, however, was precisely to push mapping solutions to their limits, notably with the high-frequency motions of its hand-held sensor acquisition setup, and to help push the accuracy of sensor-fusion algorithms (i.e. algorithms combining multiple types of sensors as input).

For the challenge, we enhanced our LiDAR-Only system by naively integrating IMU measurements, introducing a novel Inertial-Odometry method, and we performed honorably (reaching 7th place out of 40) despite our lack of experience in sensor fusion. Below we show a GIF of our LiDAR-Inertial SLAM in action under high-frequency motion, which is typically very challenging for LiDAR-Only Odometry.

Our aim for the coming months is to improve this early version (closing the gap with more refined Inertial-SLAM methods) and present our work in September for ICRA 2023. Stay tuned!

(As always, all the code will be made public and open source.)

Acknowledgments

First and foremost, thanks to Jean-Emmanuel Deschaud, principal collaborator on the paper and author of the original code base, and to François Goulette, my PhD supervisor, for their invaluable feedback while constructing this project.

And on Kitware Europe’s side, thanks to @julia-sanchez for the fruitful exchanges, which helped us improve the quality of the code and learn the lessons from Kitware Europe’s great experience with LiDAR SLAM in production environments.

References

- [1] J. Zhang and S. Singh, “Low-drift and real-time lidar odometry and mapping,” Auton. Robots, vol. 41, no. 2, pp. 401–416, Feb. 2017. [Online]. Available: https://doi.org/10.1007/s10514-016-9548-2

- [2] J.-E. Deschaud, “IMLS-SLAM: Scan-to-model matching based on 3D data,” in 2018 IEEE International Conference on Robotics and Automation (ICRA), 2018, pp. 2480–2485.

- [3] A. Geiger, P. Lenz, and R. Urtasun, “Are we ready for autonomous driving? The KITTI vision benchmark suite,” in 2012 IEEE Conference