ParaView Cinema: An Image-Based Approach to Extreme-Scale Data Analysis

The supercomputing community is moving toward extreme scale, which changes how we analyze simulation results. Although large-scale visualization tends to be less interactive, we have developed ParaView Cinema, an image-based approach to extreme-scale data analysis that enables interactive exploration and metadata browsing.

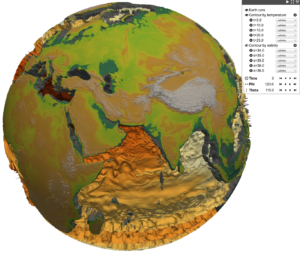

Cinema is a novel, open-source framework built on top of ParaView that couples data-processing exporters with a client user interface for analyzing output through images or other types of reduced data. Cinema captures images from several camera positions and under several filter configurations.

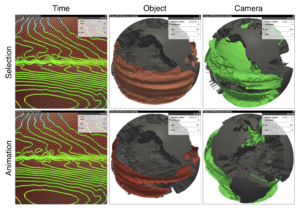

With Cinema, data processing and visualization occur in situ or in batch mode to produce a set of pre-computed images or reduced data, which are used later to analyze the simulation results interactively. Unlike traditional in situ approaches, Cinema focuses on gathering a larger set of images (covering camera positions, operations, and parameters to operations) to produce explorable results. Because the data generated by Cinema is lightweight, those results can be analyzed and explored using standard Web technologies.
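To make the idea of a pre-computed image set concrete, here is a minimal sketch in plain Python of how such a set could be enumerated: one image per combination of camera position and operation parameter. It does not use Cinema's or ParaView's API. The function `build_image_index`, the parameter names (`phi`, `theta`, `contour`), the sampled values, and the filename pattern are all assumptions made for illustration, and the rendering step itself is left out.

```python
import itertools


def build_image_index(parameters, pattern):
    """Return one entry per combination of parameter values.

    `parameters` maps a parameter name to its list of sampled values;
    `pattern` is a filename template with one placeholder per name.
    """
    names = list(parameters)
    index = []
    for values in itertools.product(*(parameters[n] for n in names)):
        combo = dict(zip(names, values))
        # A real exporter would render and save an image here.
        index.append({"args": combo, "file": pattern.format(**combo)})
    return index


# Hypothetical sampling: 12 azimuths, 3 elevations, 2 contour values.
parameters = {
    "phi": list(range(0, 360, 30)),
    "theta": [-45, 0, 45],
    "contour": [0.25, 0.75],
}
index = build_image_index(parameters, "{phi}_{theta}_{contour}.png")
print(len(index))        # 72 images in total
print(index[0]["file"])  # 0_-45_0.25.png
```

The image count is the product of the number of samples per parameter, so the sampling density of each parameter is the main lever on how much output is produced.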

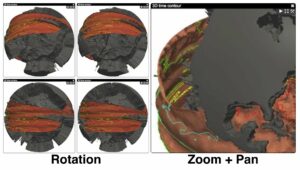

Cinema’s interface for interactive exploration mimics standard mouse interaction for 3D data such as rotation, panning, and zooming.

The difference is that Cinema relies on pre-rendered images to emulate interactive navigation around an object. As you navigate, it seems as if images are being rendered interactively from a 3D model. In reality, Cinema simply browses through its existing set of pre-computed images, much like Google Street View.
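The browsing behavior described above can be sketched as a nearest-sample lookup: a requested camera orientation is snapped to the closest pre-rendered view, and the matching image is returned. This is a plain-Python illustration under assumed names (`nearest_sample`, `image_for_view`), an assumed sampling of angles, and an assumed filename scheme, not the actual logic of Cinema's web client.

```python
def nearest_sample(value, samples, period=None):
    """Return the sampled value closest to `value`.

    When `period` is given (for example 360 for an azimuth in degrees),
    distance is measured around the circle, so 350 is close to 0.
    """
    def distance(sample):
        d = abs(value - sample)
        if period is not None:
            d %= period
            d = min(d, period - d)
        return d

    return min(samples, key=distance)


def image_for_view(phi, theta, phi_samples, theta_samples):
    """Map a requested camera orientation to a pre-rendered image name."""
    phi_hit = nearest_sample(phi, phi_samples, period=360)
    theta_hit = nearest_sample(theta, theta_samples)
    return f"{phi_hit}_{theta_hit}.png"  # hypothetical naming scheme


phi_samples = list(range(0, 360, 30))
theta_samples = [-45, 0, 45]
print(image_for_view(352.0, 10.0, phi_samples, theta_samples))  # 0_0.png
```

Because every interaction reduces to a lookup and an image load, the cost of navigation is independent of the size of the original simulation data.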

As for future developments, we are currently refining the graphical web client to provide a more polished and robust user experience.

This work with Los Alamos National Laboratory led to a paper at Supercomputing 2014 (SC14), “An Image-based Approach to Extreme Scale In Situ Visualization and Analysis,” which James Ahrens will present at the conference in New Orleans on November 19th at 11:30 AM in rooms 391-92.