ParaView and Project Tango: Loading Data

Editor's Note: In 2017/2018 Google shut down Project Tango in favor of ARCore. While ParaView is under active development, and Kitware still does a lot of work with point clouds, we no longer have working versions of the Project Tango device APKs referenced in this post.

Introduction

We use ParaView for many of our point cloud projects: object detection, mobile LiDAR, advanced visualization, and more. ParaView provides a platform for interactive visualization and processing pipelines with Python scriptability. Last year we wrote a blog post describing how to load point cloud data into ParaView from the first generation Project Tango development kit. In this article we describe an updated method that works with the current development kit from Google. We are just getting started with the device and SDK and wanted to share our initial experiences and results.

Using the new device, we have begun mapping and analyzing our 3D environment. The video below shows the recorded information from a walk around our office. This video uses the eye-dome lighting feature of ParaView to visualize the point cloud over the whole sequence. The individual point cloud captured at each acquisition is highlighted in red, and the path of the tablet is shown in yellow.

Installing the recorder app

We used the Point Cloud Java example from Project Tango Java API Example Projects as the basis for our recording tool. We modified the application to record device pose and point cloud data from the Project Tango API. This data is saved to VTK files, which can be loaded directly into ParaView. The source code for this application is available here under the Apache 2.0 license. Improvements or fixes to this app are welcome as pull requests. A binary download of the .apk is available here (update: no longer available; see the editor’s note at the top of the page). The current version is built against the Fermat release of the Project Tango SDK.

Using the recorder app

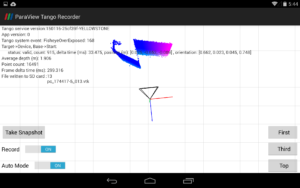

To start recording, open the ParaView Tango Recorder on the device. After starting the app, hold the device still for several seconds while the device initializes itself. You can start using the app when you see a point cloud appear. The app listens to callbacks from the Project Tango SDK for updates of the device pose and for new point cloud data. Recorded data is written to the sdcard storage.

The app can record two different types of data:

- Point Cloud – the 3D coordinates of each point in the point cloud along with a timestamp. Currently we record a subset of the available point cloud data (every third frame) to reduce overall storage requirements.

- Device Pose – the 3D position and orientation of the device, along with a timestamp. These are recorded separately from the point cloud data.
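To give a feel for what a recorded point cloud file can look like, here is a minimal sketch that writes a handful of 3D points in the legacy ASCII VTK polydata format, which ParaView can open. This is an illustration only: we are assuming an ASCII polydata layout with one vertex cell per point, and `write_vtk_points` is a hypothetical helper, not code taken from the recorder app.

```python
import io


def write_vtk_points(stream, points, title="point cloud"):
    """Write 3D points as legacy ASCII VTK polydata, one vertex cell per point.

    Assumption: this layout is chosen for illustration; the recorder app may
    store its data differently (for example in binary, or with extra fields).
    """
    n = len(points)
    stream.write("# vtk DataFile Version 3.0\n")  # legacy format header
    stream.write(title + "\n")                    # free-form title line
    stream.write("ASCII\n")
    stream.write("DATASET POLYDATA\n")
    stream.write(f"POINTS {n} float\n")
    for x, y, z in points:
        stream.write(f"{x:.6f} {y:.6f} {z:.6f}\n")
    # Each vertex cell is written as "1 <point index>", so the total size
    # field is 2 * n integers.
    stream.write(f"VERTICES {n} {2 * n}\n")
    for i in range(n):
        stream.write(f"1 {i}\n")


if __name__ == "__main__":
    buffer = io.StringIO()
    write_vtk_points(buffer, [(0.0, 0.0, 1.0), (0.5, -0.25, 2.0)])
    print(buffer.getvalue())
```

The vertex cells are included so that the points render without any extra filter; a file containing only a `POINTS` section would load but might show nothing until vertices are generated.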

To start recording, toggle on the Record switch; this begins recording the pose information only. Point cloud data can be acquired either by tapping the Snapshot button to record a single point cloud or by enabling Auto Mode, which automatically records every third point cloud that becomes available. The First, Third, and Top buttons control the camera viewpoint of the live point cloud stream, showing first-person, third-person, and top-down views. If you have difficulty viewing the point cloud data, try restarting the app and review the troubleshooting procedures.

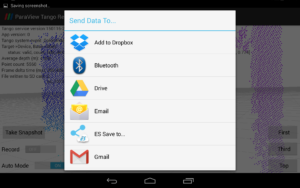

When recording is stopped by switching off the Record switch, a dialog appears for sharing the scan data, compressed as a .zip file, with another application. You can then send the scan to a destination such as Google Drive or email.

There will be a delay before this dialog appears, so be patient; longer scans will require more time to launch the Send Data To… dialog.

The data is also recorded on the SD card, in the folder /sdcard/Tango/MyPointCloudData. There is no feature within the current app to clear that data from disk. If you want to free disk space you can use a file manager app or adb to delete the files manually.
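As a rough sketch of the manual cleanup with adb, the snippet below builds two commands: one to copy the recordings to your computer and one to delete them from the device. It assumes `adb` is on your PATH and the device is connected with USB debugging enabled. The helper names `adb_commands` and `run_all` are ours, for illustration. By default the commands are only printed, since the delete step cannot be undone.

```python
import subprocess

# Folder named in this post where the recorder writes its data.
REMOTE_DIR = "/sdcard/Tango/MyPointCloudData"


def adb_commands(local_dir, remote_dir=REMOTE_DIR):
    """Return adb argument lists: first back up the recordings, then delete them."""
    return [
        # Copy the whole recording folder from the device to local_dir.
        ["adb", "pull", remote_dir, local_dir],
        # Remove the folder's contents; the glob is expanded by the device shell.
        ["adb", "shell", "rm", "-r", remote_dir + "/*"],
    ]


def run_all(commands, dry_run=True):
    """Print each command and, unless dry_run is set, execute it."""
    for cmd in commands:
        print(" ".join(cmd))
        if not dry_run:
            subprocess.run(cmd, check=True)


if __name__ == "__main__":
    # Dry run: shows what would be executed without touching the device.
    run_all(adb_commands("tango_backup"))
```

Pulling before deleting is a deliberate choice here: the app has no undo, so it seems safer to keep a local copy until you have confirmed the data loads.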

Loading data in ParaView

The ParaView Tango Recorder app stores pose and point cloud information as VTK files, bundled together in a .zip file. Sample data is available here. After the .zip file is extracted, the contents may be opened in ParaView. For this tutorial we used ParaView 4.3.1. After opening ParaView, use File > Open… to load the pose and point cloud data. The point cloud files should appear as a single collapsed entry. If you select and load this entry, without uncollapsing the filenames, the data will load as a point cloud animation sequence. After loading, we toggle off the visibility of the scan data to show only the file pc_14143861_poses.vtk. This shows the XYZ position of the device during recording. In this case it traces a path through our office. The orientation and time information are stored as point data within the object.
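If you prefer to script the extraction step, the sketch below unpacks a recorder .zip and separates the pose file from the point cloud frames, sorting the frames by their trailing number so they play back in order. The only file name we know from this post is pc_14143861_poses.vtk; treating every other .vtk file as a point cloud frame with a numeric suffix is an assumption, and `extract_scan` and `frame_key` are hypothetical helpers.

```python
import re
import zipfile
from pathlib import Path


def frame_key(path):
    """Sort key using the last integer in the file stem, so frame 10 follows frame 9.

    Assumption: point cloud file names end in a frame or timestamp number.
    """
    numbers = re.findall(r"\d+", path.stem)
    return (int(numbers[-1]) if numbers else -1, path.name)


def extract_scan(zip_path, out_dir):
    """Extract a recorder .zip and return (pose_files, point_cloud_files)."""
    with zipfile.ZipFile(zip_path) as archive:
        archive.extractall(out_dir)
    vtk_files = list(Path(out_dir).rglob("*.vtk"))
    # Files ending in "_poses.vtk" hold the device path; the rest are frames.
    poses = sorted(p for p in vtk_files if p.name.endswith("_poses.vtk"))
    clouds = sorted(
        (p for p in vtk_files if not p.name.endswith("_poses.vtk")),
        key=frame_key,
    )
    return poses, clouds
```

Inside `pvpython`, the sorted list could then be handed to `paraview.simple.OpenDataFile` as a list of file name strings, which should load it as a file series in the same way the collapsed entry does in the File > Open… dialog. We have not verified this against ParaView 4.3.1 specifically.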

Next, we can view and animate the point cloud data. The Animation toolbar at the top enables sequential playback of the point cloud data. In the figure below, we see a 3D scan of a person wearing boxing gloves from the perspective of the depth camera.

In a follow-up post we will show how to align the depth information with the device pose, visualize the orientation of the device as glyphs, overlay multiple depth scans into a single scene, and apply other techniques needed to create visualizations like the video at the top of this post.

Links

- ParaView: http://www.paraview.org/download/

- Recording APK: http://midas3.kitware.com/midas/item/316868 (updated)

- Source code for recorder: https://github.com/Kitware/ParaViewTangoRecorder

- Example data: http://midas3.kitware.com/midas/item/316864

Please could you clarify?

“If you select and load this entry, without uncollapsing the filenames, the data will load as a point cloud animation sequence. ”

Should we load collapsed or uncollapsed?

I would recommend loading them collapsed so they appear as an animation sequence.

Thanks. I have seen your post in the Project Tango community about the need to apply a filter to transform the depth data to world coordinates.

You are doing excellent work. I would like to contact your group in case you would want to collaborate or work with our company in Spain and the Middle East on some business where we are going to use scanner technology.

This is my mail:

ads@ceg-ksa.com

This. Is awesome! Just got it working with Android Studio today and viewing, really looking forward to the next post.

Great article, I’m very interested in the follow up articles you mention. I want to align the depth information with the pose and overlay multiple depth scans into a single scene. When do you expect to post that, and are there other resources available on how to do so in the meantime? Thank you!

I’m with Brett. I work in an architecture firm, and this idea could work wonders seeing how point cloud data can be used in programs like Revit. I am super excited to see how to align the depth information with the pose data.

Thank you again!

hi..i liked your post very much..when is the followup post describing how to make video like above coming?

Hi

I would like to know if it is possible to record the data and use it like live streaming. I mean record the data, use it without saving as ZIP file and use it via bluetooth with another device.

Great article! I was wondering when the followup post will be available.

Hey there,

is it possible to provide a new link for the binary download as the current is dead?

Jan. St.,

In 2017/2018 Google shut down Project Tango in favor of ARCore. While ParaView is under active development, and Kitware still does a lot of work with point clouds, we no longer have working versions of the Project Tango device APKs referenced in this post.

I have added this as a note to the top of the post.

Best,

Brad

Brad,

I’m aware of that, but I am doing some investigations in depth cameras and have a Lenovo Phab 2 Pro lying on my table. I would appreciate to get a working binary, as the source code couldn’t be used out of the box, while cloning it directly from Android Studio. I just want to know if it is worth it or develop all from the start on my own.

Regards,

Jan.