ParaLabel: A ParaView plugin for 3D object detection annotation at scale

Accurate 3D object detection is an important computer vision task in domains such as remote sensing, medical imaging and autonomous driving. It usually requires annotating datasets with precise labels to enable robust model training. However, annotating large datasets with precise labels is time-consuming. To address this, we present ParaLabel, a novel plugin for ParaView.

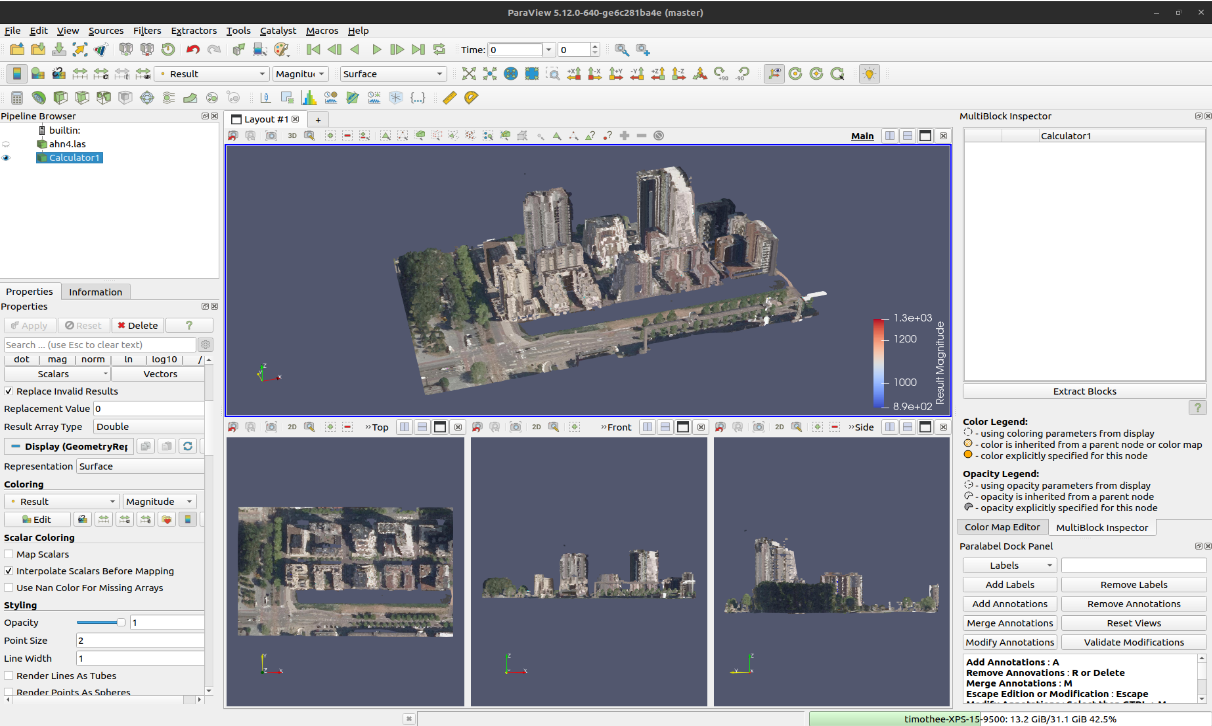

In this blog post, we’ll delve into the initial developments of ParaLabel, highlighting its integration with ParaView and VTK. We will also showcase an annotation workflow on a popular large-scale dataset, the Dutch remote sensing dataset AHN4.

Existing 3D annotation platforms

An annotation platform is software designed to label data. In the context of machine learning, users add relevant information or labels to their datasets, which is necessary for training AI models. Examples of such information are:

- Metadata: additional details about the data itself (e.g. a label)

- Annotations: detailed markings on pieces of data (e.g. bounding boxes, points)

For instance, to train a model that detects vehicles in a video, you would first need to create a dataset of labelled images marking the location of the vehicle in each of them. Similarly, for a 3D object detection task, you would need to create 3D bounding box labels around the objects of interest.

In remote sensing, the user usually loads a tile of the data representing a small area. This is the use case illustrated in the figures below.

Several tools support this type of annotation, including CVAT, Supervisely, Pointly and Segments.ai. These are popular tools, but some basic features (such as camera clipping or changing the point size) are often not available for free, and they lack support for desktop environments and large datasets. Motivated by these limitations, we decided to develop ParaLabel, which offers several benefits:

- Fully open source: Released under the permissive Apache 2.0 license for maximum flexibility.

- Scalable data handling: Inherits ParaView’s data scalability on High-Performance Computing environments, making it possible to handle large and complex datasets.

- Flexible deployment options: Can be run locally (desktop) or remotely through a client-server approach.

Figure 1. (From left to right) CVAT, Segments.ai and Supervisely user interfaces.

Methods

ParaLabel includes multiple VTK modules: a reader/writer for the annotation file and an annotation manager. It also features a new UI for accurate annotation and user interaction with the scene.

Reader/writer and VTK data model

There are limited options available for 3D detection annotation formats; Supervisely and Amazon SageMaker are some examples. We opted for a variation of the open format of the popular KITTI3D benchmark, excluding the temporal information. This choice was driven by our desire to contribute to the research community. Relying on an external open-source format also helps with maintenance and code interoperability.

All annotation items (oriented bounding boxes) are stored in a single XML file for fast serialization and easy scalability. Each annotation has the following fields:

- label: The category or label of the detected object.

- position: The translation of the cuboid in meters.

- rotationXYZ: The Euler angles, converted to a rotation matrix using the XYZ convention.

- rotationAxisAngle: The rotation defined by its axis and angle, following the VTK implementation.

- scale: The length of the cuboid in meters along each axis (x, y and z).

- uid: The unique identifier for this annotation (defined only in the application).
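To make the field list concrete, here is a minimal Python sketch of a writer and reader for such a file, using only the standard library's `xml.etree.ElementTree`. The element and attribute names (`annotations`, `annotation`, `position`, and so on), the space-separated number encoding and the `Annotation` dataclass are our own assumptions, not ParaLabel's actual schema. We also read "defined only in the application" as meaning the `uid` is never written to disk and is reassigned at load time.

```python
import xml.etree.ElementTree as ET
from dataclasses import dataclass
from typing import List, Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class Annotation:
    label: str
    position: Vec3                          # cuboid center, meters
    rotation_xyz: Vec3                      # Euler angles, XYZ convention
    rotation_axis_angle: Tuple[float, ...]  # (angle, axis_x, axis_y, axis_z)
    scale: Vec3                             # cuboid size along x, y, z, meters
    uid: int = -1                           # runtime-only identifier (hypothetical)


def _fmt(values) -> str:
    # repr() of a float round-trips exactly, so write/read is lossless
    return " ".join(repr(float(v)) for v in values)


def _parse(text: str) -> tuple:
    return tuple(float(v) for v in text.split())


def write_annotations(annotations: List[Annotation]) -> str:
    """Serialize all annotations into a single XML document (uid is not stored)."""
    root = ET.Element("annotations")
    for ann in annotations:
        node = ET.SubElement(root, "annotation", label=ann.label)
        ET.SubElement(node, "position").text = _fmt(ann.position)
        ET.SubElement(node, "rotationXYZ").text = _fmt(ann.rotation_xyz)
        ET.SubElement(node, "rotationAxisAngle").text = _fmt(ann.rotation_axis_angle)
        ET.SubElement(node, "scale").text = _fmt(ann.scale)
    return ET.tostring(root, encoding="unicode")


def read_annotations(xml_text: str) -> List[Annotation]:
    """Parse the XML document; uids are assigned in file order at load time."""
    root = ET.fromstring(xml_text)
    return [
        Annotation(
            label=node.get("label"),
            position=_parse(node.findtext("position")),
            rotation_xyz=_parse(node.findtext("rotationXYZ")),
            rotation_axis_angle=_parse(node.findtext("rotationAxisAngle")),
            scale=_parse(node.findtext("scale")),
            uid=uid,
        )
        for uid, node in enumerate(root.iter("annotation"))
    ]


# Round trip: a car rotated 90 degrees about z (values are illustrative)
car = Annotation("car", (10.0, 5.0, 0.75), (0.0, 0.0, 90.0),
                 (90.0, 0.0, 0.0, 1.0), (4.5, 1.8, 1.5))
xml_text = write_annotations([car])
loaded = read_annotations(xml_text)
```

Keeping every annotation in one document means a whole tile's labels are loaded or saved with a single parse or serialization call.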

Our platform uses a VTK dataset type that fits our use case well: the partitioned dataset collection. It is a redesign of the multiblock dataset that efficiently stores and manages multiple items, specifically for distributed-data applications. The key improvement is decoupling the arrangement of the data (vtkDataAssembly) from the actual data (vtkPartitionedDataSetCollection and vtkPartitionedDataSet). For more information, please refer to the following ParaView Discourse post.

In our application, a single annotation file is represented as one instance of vtkPartitionedDataSetCollection. Each element within it is a vtkPartitionedDataSet that contains exactly one annotation, stored as a vtkPolyData.
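Each annotation's vtkPolyData has to turn the stored position, rotation and scale into an actual cuboid. The plain-Python sketch below shows one plausible way to do that geometry: it builds a rotation matrix from the Euler angles, recovers the equivalent axis-angle pair (angle in degrees first, matching the argument order of `vtkTransform::RotateWXYZ`), and computes the 8 corners and 6 outward-facing quads of the box. The exact XYZ convention is an assumption on our side: we use degrees and R = Rz·Ry·Rx (rotate about fixed x, then y, then z); ParaLabel's own convention may differ. The function names are hypothetical.

```python
import math
from itertools import product
from typing import List, Tuple

Matrix = List[List[float]]
Vec3 = Tuple[float, float, float]

# Quads as corner indices, wound counter-clockwise when seen from outside.
# Corner index = 4*ix + 2*iy + iz, where ix/iy/iz is 0 for the min side, 1 for max.
FACES = [[0, 1, 3, 2], [4, 6, 7, 5],   # -x, +x
         [0, 4, 5, 1], [2, 3, 7, 6],   # -y, +y
         [0, 2, 6, 4], [1, 5, 7, 3]]   # -z, +z


def euler_xyz_to_matrix(rx: float, ry: float, rz: float) -> Matrix:
    """Rotation matrix R = Rz @ Ry @ Rx for angles in degrees (assumed convention)."""
    cx, sx = math.cos(math.radians(rx)), math.sin(math.radians(rx))
    cy, sy = math.cos(math.radians(ry)), math.sin(math.radians(ry))
    cz, sz = math.cos(math.radians(rz)), math.sin(math.radians(rz))
    return [[cz * cy, cz * sy * sx - sz * cx, cz * sy * cx + sz * sx],
            [sz * cy, sz * sy * sx + cz * cx, sz * sy * cx - cz * sx],
            [-sy, cy * sx, cy * cx]]


def matrix_to_axis_angle(r: Matrix) -> Tuple[float, float, float, float]:
    """Return (angle_deg, ax, ay, az) for a proper rotation matrix."""
    trace = r[0][0] + r[1][1] + r[2][2]
    angle = math.acos(max(-1.0, min(1.0, (trace - 1.0) / 2.0)))
    if angle < 1e-9:                      # identity: any axis works
        return (0.0, 0.0, 0.0, 1.0)
    if math.pi - angle < 1e-6:            # ~180 deg: R ~ 2*a*a^T - I
        i = max(range(3), key=lambda k: r[k][k])
        a = [0.0, 0.0, 0.0]
        a[i] = math.sqrt((r[i][i] + 1.0) / 2.0)
        for j in range(3):
            if j != i:
                a[j] = r[i][j] / (2.0 * a[i])
        return (math.degrees(angle), a[0], a[1], a[2])
    s = 2.0 * math.sin(angle)
    return (math.degrees(angle),
            (r[2][1] - r[1][2]) / s, (r[0][2] - r[2][0]) / s, (r[1][0] - r[0][1]) / s)


def box_corners(position: Vec3, r: Matrix, scale: Vec3) -> List[Vec3]:
    """World-space corners of a cuboid centered at `position` with edge lengths `scale`."""
    corners = []
    for signs in product((-0.5, 0.5), repeat=3):   # x varies slowest, z fastest
        local = [s * length for s, length in zip(signs, scale)]
        corners.append(tuple(position[row] + sum(r[row][k] * local[k] for k in range(3))
                             for row in range(3)))
    return corners


r = euler_xyz_to_matrix(0.0, 0.0, 90.0)
axis_angle = matrix_to_axis_angle(r)
corners = box_corners((10.0, 5.0, 0.75), r, (4.5, 1.8, 1.5))
```

The corner list and `FACES` map directly onto the points and polygon cells of a polydata, and storing both rotation forms in the file is redundant but convenient: the axis-angle pair can be handed straight to a VTK transform, while the Euler angles are easier for an annotator to read and edit.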

Annotation manager

An annotation platform requires two kinds of operations: managing the labels/categories, and managing the annotations themselves.

Category management is currently minimalistic: we only update the content of a QComboBox. Our annotation management module is a collection of VTK filters that operate directly on the vtkPartitionedDataSetCollection, with three basic operations:

- Addition (vtkAddAnnotationFilter): Add a new annotation item to the collection by specifying its properties.

- Editing (vtkEditAnnotationFilter): Edit the properties of a selected annotation directly in the active ParaView render view (via a vtkBoxWidget).

- Removal (vtkRemoveAnnotationFilter): Delete the selected annotation from the collection.

Because these filters are built on VTK, they inherit its pipeline capabilities, which allow deferred computation and history-like functionality.
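The filter-based design can be illustrated with a pure-Python analogue. Each operation takes a collection and returns a new one without modifying its input, much like a VTK filter produces a new output from its input, so keeping every intermediate output is enough for a simple undo history. The `Box` type, the uid-keyed dictionary standing in for the vtkPartitionedDataSetCollection, and the function and `History` names are hypothetical simplifications, not ParaLabel's API.

```python
from dataclasses import dataclass, replace
from typing import Callable, Dict, List, Tuple

Vec3 = Tuple[float, float, float]


@dataclass(frozen=True)
class Box:
    label: str
    position: Vec3
    scale: Vec3


Collection = Dict[int, Box]  # uid -> annotation, one entry per partitioned dataset


def add_annotation(coll: Collection, box: Box) -> Collection:
    """Analogue of an 'add' filter: copy the input and append one item."""
    out = dict(coll)
    out[max(coll, default=-1) + 1] = box  # next uid after the current maximum
    return out


def edit_annotation(coll: Collection, uid: int, **changes) -> Collection:
    """Analogue of an 'edit' filter: copy the input, replace one item's fields."""
    if uid not in coll:
        raise KeyError(f"no annotation with uid {uid}")
    out = dict(coll)
    out[uid] = replace(coll[uid], **changes)
    return out


def remove_annotation(coll: Collection, uid: int) -> Collection:
    """Analogue of a 'remove' filter: copy the input without one item."""
    out = dict(coll)
    del out[uid]  # raises KeyError if the uid does not exist
    return out


class History:
    """Keeps every intermediate output so that the last operations can be undone."""

    def __init__(self) -> None:
        self.states: List[Collection] = [{}]

    @property
    def current(self) -> Collection:
        return self.states[-1]

    def apply(self, op: Callable[..., Collection], *args, **kwargs) -> Collection:
        self.states.append(op(self.current, *args, **kwargs))
        return self.current

    def undo(self) -> Collection:
        if len(self.states) > 1:
            self.states.pop()
        return self.current


h = History()
h.apply(add_annotation, Box("car", (0.0, 0.0, 0.0), (4.5, 1.8, 1.5)))
h.apply(add_annotation, Box("tree", (5.0, 2.0, 0.0), (3.0, 3.0, 8.0)))
h.apply(edit_annotation, 0, label="truck")
h.apply(remove_annotation, 1)
```

Copying the whole collection on every step is fine for a sketch; a real pipeline would share unchanged partitions between outputs rather than duplicating them.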

Custom render view

To improve interaction with the 3D scene in ParaView, we developed a custom render view configuration. It combines one primary orthographic view, which serves as the main user interface, with three additional views (top, front and side), so that users can interact more intuitively with their annotated data.
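The auxiliary views mostly come down to camera placement. The small Python sketch below computes, for a given annotation box, a focal point, camera position, view-up vector and parallel scale for top, front and side orthographic views. The axis convention (z up, as is common for aerial lidar; front view looking along +y; side view looking along -x), the 20% margin and the function name are our assumptions, not ParaLabel's implementation.

```python
from typing import Dict, Tuple

Vec3 = Tuple[float, float, float]

# Assumed convention: z is up. Each entry is
# (direction from focal point to camera, view-up vector).
VIEW_DIRECTIONS = {
    "top": ((0.0, 0.0, 1.0), (0.0, 1.0, 0.0)),
    "front": ((0.0, -1.0, 0.0), (0.0, 0.0, 1.0)),
    "side": ((1.0, 0.0, 0.0), (0.0, 0.0, 1.0)),
}


def ortho_cameras(center: Vec3, scale: Vec3, margin: float = 1.2) -> Dict[str, dict]:
    """Camera settings framing an annotation box in each auxiliary orthographic view."""
    # In parallel projection the distance does not change the image, only clipping,
    # so any distance that puts the camera outside the box works.
    distance = 2.0 * max(scale)
    cameras = {}
    for name, (direction, up) in VIEW_DIRECTIONS.items():
        position = tuple(c + distance * d for c, d in zip(center, direction))
        # VTK's parallel scale is half the viewport height in world units; use the
        # box extent along the view-up axis, which maps to the screen's vertical axis.
        vertical_extent = sum(abs(u) * s for u, s in zip(up, scale))
        cameras[name] = {
            "focal_point": center,
            "position": position,
            "view_up": up,
            "parallel_scale": margin * vertical_extent / 2.0,
        }
    return cameras


cams = ortho_cameras((10.0, 20.0, 5.0), (4.0, 2.0, 6.0))
```

In ParaView's Python shell, such values could be assigned to a render view's `CameraFocalPoint`, `CameraPosition`, `CameraViewUp` and `CameraParallelScale` properties after enabling `CameraParallelProjection`. The sketch only fits the vertical extent of the box; a fuller version would also account for the box width and the view's aspect ratio.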

Results

The ParaLabel project is available on GitLab at https://gitlab.kitware.com/keu-computervision/paralabel/paralabel. You can find the first Linux release, v0.1.0-alpha, here. It is only compatible with ParaView 5.13.1-RC1 and has been tested on Ubuntu 20.04 LTS (Windows support will come later).

The video that follows shows an example of loading an annotation file and remotely rendering an aerial lidar dataset from the IGN Lidar HD project. It was tested on a server with an RTX A6000 rendering 250M points (up to 50 GB of RAM), with client interaction on a consumer-grade laptop (i7-11800H @ 2.30GHz × 16). The tiles were preprocessed and colorized using ign-pdal-tools, then merged and exported to the .vtu file format within ParaView.

Note

Manipulating even bigger data on such a powerful machine is actually limited by the OpenGL backend used in VTK. We demonstrated how to manipulate bigger data using the WebGPU API in a previous blog post: https://www.kitware.com/achieving-interactivity-with-a-point-cloud-of-2-billion-points-in-a-single-workstation/.

Conclusion

Throughout this post, we presented ParaLabel, a novel ParaView plugin for 3D object detection annotation at scale. There are many future avenues for the project:

- AI annotation assistance, and more specifically the integration of Meta’s Segment Anything in VTK for 3D labeling.

- Explore other data models such as image data for more efficient storage and rendering.

- Annotation at a larger scale, specifically for large point clouds, thanks to the recent VTKHDF integration in ParaView.

- Other use cases, especially segmentation for applications in tractography (d-MRI) or vascular imaging (CT), which are our priority.

- AutoML, with distributed training and/or active learning.

- Annotator workflow management.

We believe ParaLabel can become a major tool for large-scale 3D data annotation in many domains, such as remote sensing, medical imaging, seismology and more. Let us know in which domain you could use such a tool!

Don’t hesitate to reach out to us if you would like to contribute!

Acknowledgments

Thanks to our intern, Timothee Teyssier, who contributed to the development in 2024 during his six-month internship.