Open Source and the Road to One Quintillion Double FLOPS

In high-performance computing (HPC), petascale machines can perform a quadrillion (10^15) floating-point operations per second (FLOPS) [1]. While this may seem like a lot, complex scientific simulations can demand even more computing power. The Exascale Computing Project (ECP) of the Department of Energy (DOE) aims to address this need, as it seeks to create “a capable exascale computing ecosystem that delivers 50 times more computational science and data analytic application power than possible with DOE HPC systems such as Titan (ORNL) and Sequoia (LLNL)” [2]. At exascale, performance climbs to a quintillion (10^18) double-precision FLOPS. To reach exascale and use it effectively, software must run faster and take advantage of new hardware technologies.

Data analysis and visualization software for exascale computing is being developed through ECP by members of our HPC and visualization team, together with Sandia National Laboratories (SNL), Oak Ridge National Laboratory (ORNL), Los Alamos National Laboratory (LANL) and the University of Oregon (UO) [3]. This software is an open source toolkit called VTK-m. Not only is VTK-m geared toward writing algorithms that use data-parallel methods to run faster, but it is also tailored to new hardware, including multi-core and many-core systems [4].
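
To make "data-parallel" concrete, here is a minimal Python sketch of the general idea. It is not VTK-m code (VTK-m is a C++ library with its own abstractions), and the function names `magnitude`, `parallel_map` and `parallel_reduce` are hypothetical. A kernel that reads only its own input element can be applied to every element independently, so the work can be split across cores; partial results are then combined with an associative reduction.

```python
from concurrent.futures import ThreadPoolExecutor
from functools import reduce
import math
import operator


def magnitude(v):
    """Per-element kernel: reads only its own input, so every call is independent."""
    x, y, z = v
    return math.sqrt(x * x + y * y + z * z)


def parallel_map(kernel, data, workers=4):
    """Apply `kernel` to every element, spreading the calls over a thread pool."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(kernel, data))


def parallel_reduce(op, values, workers=4):
    """Reduce each chunk separately, then combine the partial results.

    Correct only if `op` is associative (e.g. addition, max)."""
    if not values:
        raise ValueError("cannot reduce an empty sequence")
    size = math.ceil(len(values) / workers)
    chunks = [values[i:i + size] for i in range(0, len(values), size)]
    with ThreadPoolExecutor(max_workers=workers) as pool:
        partials = list(pool.map(lambda chunk: reduce(op, chunk), chunks))
    return reduce(op, partials)


vectors = [(3.0, 4.0, 0.0), (1.0, 2.0, 2.0), (0.0, 0.0, 5.0)]
mags = parallel_map(magnitude, vectors)
print(mags)                                  # [5.0, 3.0, 5.0]
print(parallel_reduce(operator.add, mags))   # 13.0
print(parallel_reduce(max, mags))            # 5.0
```

Because each call to `magnitude` touches only its own element, the same decomposition could map onto CPU cores or GPU threads. In CPython, the thread pool shows the structure of the computation rather than delivering a real speedup.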

At the time of writing, the most powerful supercomputer is Summit, a 200-petaFLOPS system at ORNL that is one-fifth of the way to exascale [5]. Close behind Summit is Sierra, a 125-petaFLOPS system at LLNL [6]. VTK-m is compatible with the hardware in both Summit and Sierra, and it is built to scale up to future exascale systems.
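
The "one-fifth" figure follows directly from the SI prefixes (peta = 10^15, exa = 10^18), using the peak performance numbers quoted above:

$$
\begin{aligned}
1\ \text{exaFLOPS} &= 10^{18}\ \text{FLOPS} = 10^{3} \times 10^{15}\ \text{FLOPS} = 1000\ \text{petaFLOPS},\\
\text{Summit: } \frac{200\ \text{petaFLOPS}}{1000\ \text{petaFLOPS}} &= \frac{1}{5}, \qquad
\text{Sierra: } \frac{125\ \text{petaFLOPS}}{1000\ \text{petaFLOPS}} = \frac{1}{8}.
\end{aligned}
$$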

With the capability to compute at exascale comes a question: what do we do with the results? As data grows, it becomes harder to move, and storage systems have generally been slow to improve. With trillions of data points and counting, it becomes crucial to analyze data while it still resides in system memory.

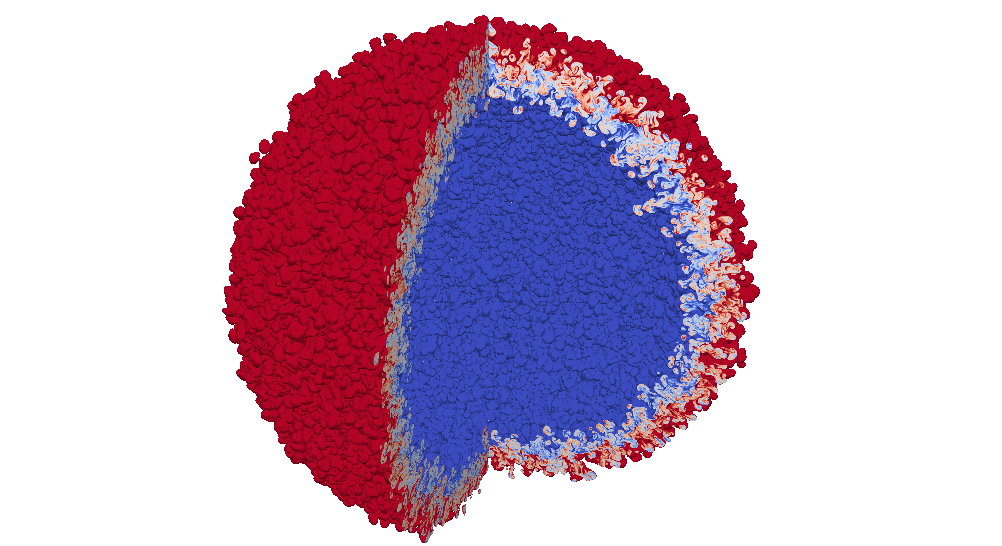

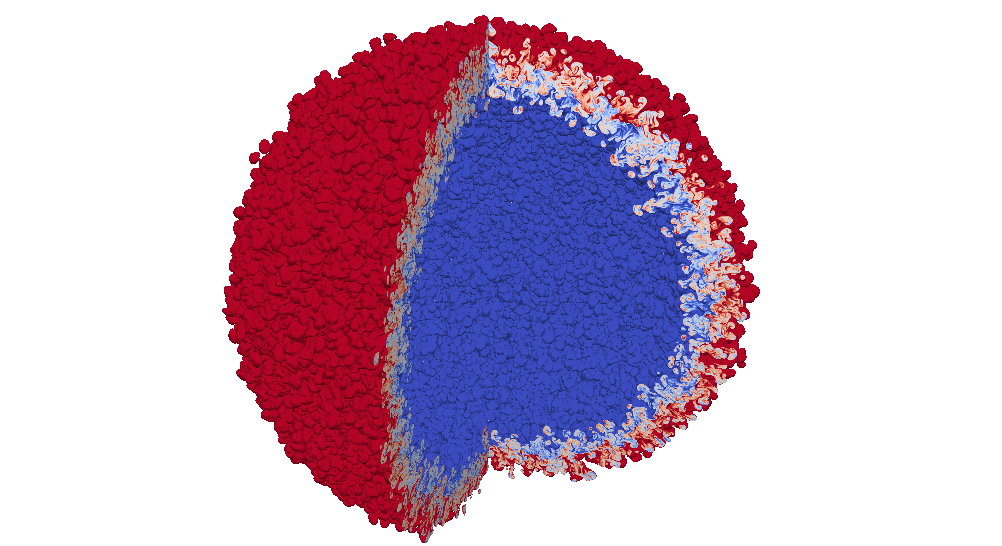

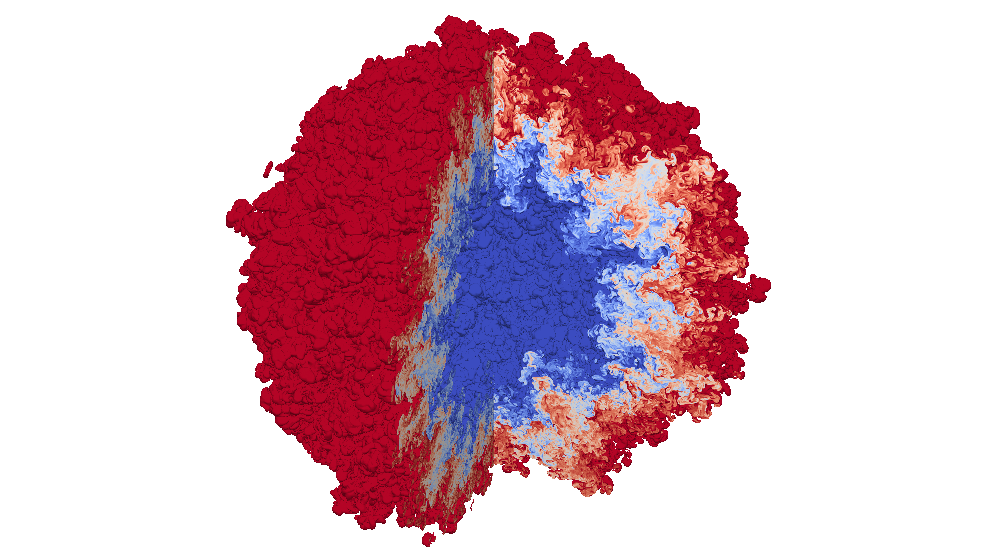

Exascale analysis is where ALPINE, another ECP project, excels. The ALPINE software is being built by members of our HPC and visualization team, LANL, UO, Lawrence Berkeley National Laboratory (LBNL) and LLNL [7]. Fundamental to ALPINE is the development of analysis techniques at scale. These techniques can run on the same supercomputers that produce the simulation results. More specifically, many of them analyze data in situ: the data stays in system memory, and the analysis runs on the fly alongside the simulation. To do this, ALPINE uses three in situ libraries: ParaView Catalyst, VisIt LibSim and ALPINE Ascent [8].
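
The in situ pattern can be sketched in a few lines of Python. This is a toy illustration under stated assumptions, not the API of ParaView Catalyst, VisIt LibSim or Ascent: `simulate_step` and `analyze` are hypothetical stand-ins. The analysis runs inside the time-step loop on data that is already in memory, and only small summaries are kept, instead of writing every full time step to disk for later (post hoc) analysis.

```python
import math


def simulate_step(field, t):
    """Hypothetical stand-in for one simulation time step; updates `field` in place."""
    for i in range(len(field)):
        field[i] = math.sin(0.1 * i + 0.5 * t)


def analyze(field):
    """In situ analysis: reduce the full field to a few numbers while it is in memory."""
    return {"min": min(field), "max": max(field), "mean": sum(field) / len(field)}


def run(num_cells=1000, num_steps=5, analyze_every=1):
    field = [0.0] * num_cells
    summaries = []  # only these small results are kept; the full field is never written out
    for t in range(num_steps):
        simulate_step(field, t)
        if t % analyze_every == 0:  # analysis frequency is a tunable trade-off
            summaries.append((t, analyze(field)))
    return summaries


for t, stats in run():
    print(t, round(stats["min"], 3), round(stats["max"], 3), round(stats["mean"], 3))
```

The design choice is the trade-off in `analyze_every`: analyzing more often captures more detail, but it takes time away from the simulation itself.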

ALPINE also uses algorithms built on VTK-m, and, like VTK-m, ALPINE’s software products are open source. ALPINE, however, is less focused on interacting directly with new hardware technologies. Instead, its work is aligned with exascale scientific applications such as atmospheric, combustion and fusion modeling. Coupled through ECP, VTK-m and ALPINE link new hardware technologies, through software improvements, to the scientific applications that run on them.

Strategic pushes from the scientific community produce innovations. The push to explore space, for example, contributed to LASIK eye surgery and freeze-dried food [9]. Such innovations can change whole industries and, in turn, the economy. By continuing to develop new technologies, the U.S. not only remains a leader in computing but also boosts its economy.

The International Conference for High Performance Computing, Networking, Storage, and Analysis (SC) offers an excellent forum for discussing exascale efforts. We plan to talk about exascale computing and the role of open source software at our booth (3735) from November 12 to November 15. Please join us in the conversation.

This research was supported by the Exascale Computing Project (17-SC-20-SC), a joint project of the U.S. Department of Energy’s Office of Science and National Nuclear Security Administration, responsible for delivering a capable exascale ecosystem, including software, applications, and hardware technology, to support the nation’s exascale computing imperative.

References

1. National Nuclear Security Administration and U.S. Department of Energy Office of Science. “What is Exascale.” Exascale Computing Project. https://www.exascaleproject.org/what-is-exascale. Accessed November 5, 2018.

2. National Nuclear Security Administration and U.S. Department of Energy Office of Science. “Fact Sheet.” Exascale Computing Project. https://www.exascaleproject.org/wp-content/uploads/2018/02/ECP-Factsheet-02-22-2018.pdf. Accessed October 19, 2018.

3. National Nuclear Security Administration and U.S. Department of Energy Office of Science. “ECP/VTK-M.” Exascale Computing Project. https://www.exascaleproject.org/project/ecp-vtk-m. Accessed October 19, 2018.

4. VTK-m. “Main Page.” http://m.vtk.org/index.php/Main_Page. July 26, 2018.

5. Oak Ridge National Laboratory. “Summit.” Oak Ridge National Laboratory Leadership Computing Facility. https://www.olcf.ornl.gov/summit. Accessed October 22, 2018.

6. Lawrence Livermore National Laboratory. “Sierra.” Livermore Computing Center. https://hpc.llnl.gov/hardware/platforms/sierra. Accessed November 7, 2018.

7. National Nuclear Security Administration and U.S. Department of Energy Office of Science. “ALPINE: Algorithms and Infrastructure for In Situ Visualization and Analysis.” Exascale Computing Project. https://www.exascaleproject.org/project/alpine-algorithms-infrastructure-situ-visualization-analysis. Accessed October 19, 2018.

8. Larsen, Matthew, James Ahrens, Utkarsh Ayachit, Eric Brugger, Hank Childs, Berk Geveci, and Cyrus Harrison. “The ALPINE In Situ Infrastructure: Ascending from the Ashes of Strawman.” In Proceedings of the In Situ Infrastructures on Enabling Extreme-Scale Analysis and Visualization, 42-46. New York: ACM, 2017. https://doi.org/10.1145/3144769.3144778.

9. Wikimedia. “NASA spinoff technologies.” Wikipedia. https://en.wikipedia.org/wiki/NASA_spinoff_technologies. October 17, 2018.