ITK orient image filter in DNN training augmentation

As part of Medical Image Quality Assurance (MIQA), an NIH-funded effort, we experimented with a deep neural network (DNN) for image quality assessment. In this blog post, we share the lessons learned from that experiment.

Most, but not all, of the magnetic resonance images (MRIs) we received had the same anatomical orientation, RAS. So one of the things we tried was enforcing a consistent anatomical orientation of images during both training and inference, hoping it would improve classification accuracy. It turned out that the exact opposite happened.
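To make "consistent anatomical orientation" concrete, here is a minimal NumPy sketch of what reorienting a voxel array involves: reordering axes and flipping the ones that point the opposite way. It is an illustration under stated assumptions, not the MIQA code and not how `itk::OrientImageFilter` is implemented. The function name `reorient_array` is hypothetical, each letter of the three-letter code is assumed to name the direction in which the corresponding array index increases, and image metadata (spacing, origin, direction), which a real filter must also update, is ignored.

```python
import numpy as np

# Opposite ends of each anatomical axis: Right/Left, Anterior/Posterior,
# Superior/Inferior.
_OPPOSITE = {"R": "L", "L": "R", "A": "P", "P": "A", "S": "I", "I": "S"}


def reorient_array(volume, source, target="RAS"):
    """Reorder and flip the axes of a 3D array from `source` to `target`.

    Hypothetical helper for illustration only. Assumes each letter names the
    direction in which the matching array index increases.
    """
    axes = []   # source axis that supplies each output axis
    flips = []  # output axes whose direction must be reversed
    for out_axis, letter in enumerate(target):
        if letter in source:
            axes.append(source.index(letter))
        elif _OPPOSITE[letter] in source:
            axes.append(source.index(_OPPOSITE[letter]))
            flips.append(out_axis)
        else:
            raise ValueError(f"cannot map {source!r} to {target!r}")
    if sorted(axes) != [0, 1, 2]:
        raise ValueError(f"{source!r} is not a valid orientation code")

    result = np.transpose(volume, axes)
    if flips:
        result = np.flip(result, axis=tuple(flips))
    # Copy into contiguous memory so later code does not see negative strides.
    return np.ascontiguousarray(result)
```

For example, `reorient_array(volume, "LPS", "RAS")` keeps the axis order and reverses the first two axes. In a real pipeline, the ITK filter is the better choice because it keeps the voxel data and the image metadata in agreement.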

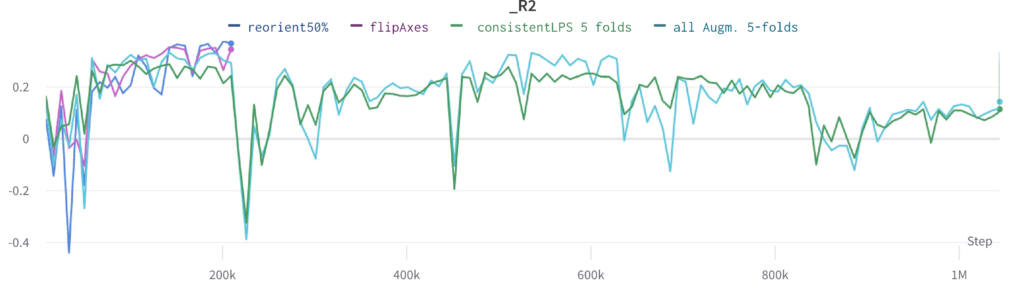

The figure above compares four training techniques by plotting their accuracy as the number of training epochs increases. The baseline accuracy is with 5 augmentations already applied; see our MIDL 2022 short paper. Using consistent image orientation in training and inference (consistentLPS 5 folds in the figure) decreases accuracy relative to the baseline (all Augm. 5-folds in the figure). How could this be? Was there a bug in our implementation of consistent anatomical image orientation? No! By orienting images consistently, we removed an implicit augmentation from the training data: a variety of anatomical orientations.

To verify this hypothesis, we implemented a new training-only augmentation: randomly flipping axes of the image. This improved the accuracy on the validation data (flipAxes in the figure), thus confirming our hypothesis. Finally, we improved our axis flipping augmentation to do full reorientation (random axis permutations, in addition to random axis flipping). We settled on invoking this augmentation with a 50% probability (reorient50% in the figure).
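The augmentation described above can be sketched in a few lines of NumPy. This is a minimal sketch rather than the MIQA implementation: the function name `random_reorient`, its parameters, and the choice to flip each axis independently with probability 0.5 are assumptions made for illustration, and it operates on a bare array without touching image metadata.

```python
import numpy as np


def random_reorient(volume, rng, probability=0.5):
    """Randomly permute and flip the axes of a volume (training only).

    Hypothetical helper for illustration. With probability `probability` the
    volume is reoriented; otherwise it is returned unchanged.
    """
    if rng.random() >= probability:
        return volume

    # Random axis permutation, e.g. (2, 0, 1).
    permutation = tuple(int(axis) for axis in rng.permutation(volume.ndim))
    reoriented = np.transpose(volume, permutation)

    # Flip each axis independently with probability 0.5 (an assumption).
    flip_axes = tuple(
        axis for axis in range(reoriented.ndim) if rng.random() < 0.5
    )
    if flip_axes:
        reoriented = np.flip(reoriented, axis=flip_axes)

    # Copy into contiguous memory so later code does not see negative strides.
    return np.ascontiguousarray(reoriented)
```

A typical call would be `random_reorient(volume, np.random.default_rng(), probability=0.5)` inside the training data loader only. Validation and inference data are left as they are, since the point is to restore orientation variety during training.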

As we are developing MIQA in the open, all of the source code is available. Feel free to examine it and adapt it to your use case. In particular, here is a direct link to the star of this article: random image reorientation. Also of interest: the Doxygen documentation of itk::OrientImageFilter.