Introducing Stereo Camera Measurements in VIAME / DIVE

A bit of Context

To help professional fishermen fish more responsibly and to protect marine ecosystems, IFREMER (the French Research Institute for Exploitation of the Sea) has initiated a project called Game of Trawls.

The main goal of this project is to allow trawls to actively select species. This requires recognizing the species caught in the net during the fishing operation, coupled with an active escape mechanism.

The project is presented here: https://gameoftrawls.ifremer.fr/en/home/

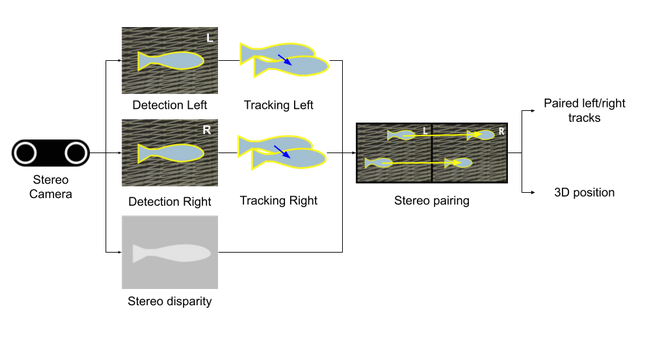

To that end, a stereo camera setup has been put in place to accurately detect, classify, track and localize each fish individually, so that the escape hatch can be opened when a species that should not be fished is caught in the net.

Below is a picture of the waterproof test setup containing a stereo camera and lighting in a pool used for our tests.

Test setup containing the stereo rig and a net in a swimming pool

Stereo Camera setup and lighting

Fish detection, classification and tracking

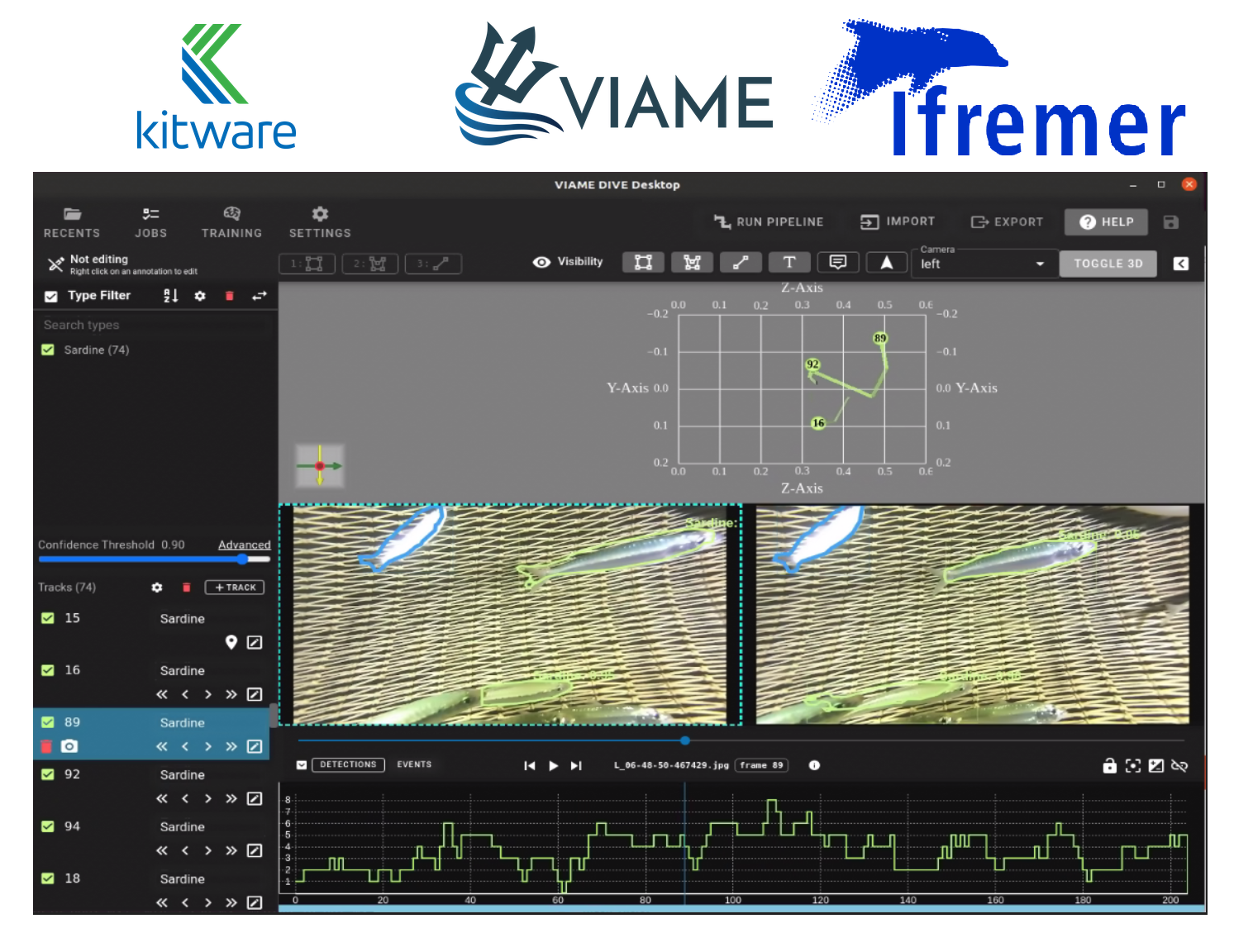

DIVE is a free and open-source annotation and analysis platform for web and desktop developed by Kitware. It has a web deployment and a desktop app, both leveraging the computer vision toolboxes VIAME and KWIVER.

It supports data annotation at the frame level or inside the image (using bounding boxes, polygons, or even keypoints), training of machine learning models (including deep learning) and running inference, all in a single user-friendly application.

You can find more details about the features offered by DIVE and other front-end applications using VIAME in this quick start guide.

Fish detection and classification

In the context of Game of Trawls, we re-trained models on herring and mackerel in order to detect and classify each individual of each species. Various models were tested, and in the end we opted for Mask R-CNN, which showed the best results and also has the advantage of providing both segmentation and classification results.

Tracking

The tracking for each fish is done independently on each frame using a Structural RNN model from VIAME’s tracking model zoo. For each frame, the Structural RNN follows the detected fish’s appearance, motion and interactions with other detected fish, and outputs the consolidated frame-to-frame fish tracks.

Paired left / right detection of a given herring at frame 0

Paired left / right tracked herring at frame 3 with visible pixel displacement

Pairing

With a calibrated stereo camera rig, along with pixel-wise association, we are able to compute a depth for each pixel in the common field of view of both cameras. This can be used to further increase the accuracy of the tracking, and to add more information such as 3D tracks of the fish.

In this particular case, the calibration must be done underwater, in a medium whose refractive index is similar to that of the environment in which the stereo matching will be performed. We therefore added a stereo calibration pipeline to VIAME.

To do so, we used OpenCV for stereo calibration, and optimized its usage for a collection of images.

Once the stereo camera system has been correctly calibrated, we rectify the images to virtually align them. This simplifies feature matching between the left and right images, because the correspondence for a given pixel is then found on the same image line in the other image.
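To illustrate why rectification helps, here is a minimal sketch of a one-dimensional search along a single image row. It is not the matching used in VIAME: it uses a plain sum of absolute differences, and the function name `match_along_row`, its parameters and the pixel values are all made up for the example.

```python
def match_along_row(left_row, right_row, x_left, half_window=1, max_disparity=4):
    """Find the disparity of the patch centered at x_left in a rectified row pair.

    Illustrative helper, not a VIAME function. Assumes x_left - half_window >= 0.
    """
    lo, hi = x_left - half_window, x_left + half_window + 1
    patch = left_row[lo:hi]
    best_disparity, best_cost = 0, float("inf")
    for d in range(max_disparity + 1):
        if lo - d < 0:
            break  # the shifted window would leave the image
        # After rectification the match lies on the same row, shifted left by d.
        candidate = right_row[lo - d:hi - d]
        cost = sum(abs(a - b) for a, b in zip(patch, candidate))
        if cost < best_cost:
            best_disparity, best_cost = d, cost
    return best_disparity


# Toy rows: the bright pattern sits 3 pixels further left in the right image.
left_row = [10, 10, 10, 10, 80, 200, 90, 10, 10, 10]
right_row = [10, 80, 200, 90, 10, 10, 10, 10, 10, 10]
disparity = match_along_row(left_row, right_row, x_left=5)
print(disparity)  # 3
```

Without rectification, the same search would have to cover a two-dimensional neighborhood or follow a slanted epipolar line for every pixel.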

We then used semi-global matching techniques to produce a dense disparity map, which can easily be converted into a depth map.
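For a rectified pair, the conversion from disparity to depth follows the standard relation depth = focal length × baseline / disparity. The snippet below is a small sketch of that relation only. The function name and the numeric values (focal length in pixels, baseline in meters) are assumptions chosen for the example, not the parameters of the Game of Trawls rig.

```python
def disparity_to_depth(disparity_map, focal_length_px, baseline_m):
    """Convert a disparity map (pixels) into a depth map (meters).

    Pixels with no valid disparity (<= 0) are returned as None.
    """
    depth_map = []
    for row in disparity_map:
        depth_map.append([
            focal_length_px * baseline_m / d if d > 0 else None
            for d in row
        ])
    return depth_map


# Hypothetical values: 800 px focal length, 12.5 cm baseline.
depth = disparity_to_depth(
    [[0.0, 50.0], [100.0, 200.0]],
    focal_length_px=800.0,
    baseline_m=0.125,
)
print(depth)  # [[None, 2.0], [1.0, 0.5]]
```

Large disparities correspond to close objects, so depth resolution degrades as the fish moves away from the cameras.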

Therefore, for each detection, we are able to accumulate depth information and derive a 3D position and bounding box for each fish. This results in the following overall pipeline.
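As a rough illustration of this step, the sketch below takes a 2D detection box and a depth map, uses the median of the valid depths inside the box, and back-projects the box center with a pinhole camera model. The choice of the median, the function name `detection_to_3d` and the intrinsics `fx`, `fy`, `cx`, `cy` are assumptions made for the example. It only computes a 3D position, not the 3D bounding box, and it is not necessarily how the VIAME pipeline accumulates depth.

```python
from statistics import median


def detection_to_3d(box, depth_map, fx, fy, cx, cy):
    """Estimate a 3D position (meters, camera frame) for one detection.

    box is (x_min, y_min, x_max, y_max) in pixels, with x_max and y_max exclusive.
    Returns None when the box contains no valid depth.
    """
    x_min, y_min, x_max, y_max = box
    depths = [
        depth_map[v][u]
        for v in range(y_min, y_max)
        for u in range(x_min, x_max)
        if depth_map[v][u] is not None
    ]
    if not depths:
        return None
    z = median(depths)  # the median limits the effect of background pixels
    u_center = (x_min + x_max) / 2.0
    v_center = (y_min + y_max) / 2.0
    # Pinhole back-projection of the box center at depth z.
    x = (u_center - cx) * z / fx
    y = (v_center - cy) * z / fy
    return (x, y, z)


# Toy 4x4 depth map with one missing pixel and one background outlier.
depth_map = [[2.0] * 4 for _ in range(4)]
depth_map[2][1] = None
depth_map[2][2] = 9.0
position = detection_to_3d((1, 1, 3, 3), depth_map, fx=100.0, fy=100.0, cx=1.0, cy=2.0)
print(position)
```

Restricting the depth samples to the segmentation mask of each fish, rather than the full box, would be a natural refinement when a mask is available.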

Implemented algorithm pipeline

Through this pipeline of detection, tracking and stereo pairing, we are able to estimate a trajectory for each individual. This makes counting more robust and opens the way to behavioral analysis of each individual and characterization of each species.

3D visualization of fish tracks

What’s next?

There is still a lot of work to be done on species classification and on the automatic reduction of bycatch of marine species (fish and marine mammals).

Embedding those algorithms in portable setups is also a crucial part of this work, so that the systems can be acquired at acceptable prices and installed with ease by fishing companies.

Regulations will come, and they will require standardized measurement systems to ensure that professional fishermen apply sustainable practices.

Improving detection, classification and measurement tools will help in that endeavor. At Kitware, we believe that open source is an important part of developing such tools, because it makes the approach transparent and auditable by everyone concerned: public agencies issuing regulations, fishing companies, and computer vision developers from the research laboratories or companies developing the tools.

These approaches are not restricted to the marine environment. The same toolchain can be used to annotate datasets, train neural networks for your specific tasks and run inference on other images, all in one app. Let us know if you are interested in using this toolchain, and contact us to see how we can work together on your business case.

Wanna try it for yourself?

You can use VIAME on your own data directly on the web at https://viame.kitware.com/#/

This work has been funded by the French Research Institute for Exploitation of the Sea (IFREMER).

VIAME was originally developed with funding from the National Oceanic and Atmospheric Administration (NOAA)’s Fisheries Strategic Initiative on Automated Image Analysis (AIASI) committee, in addition to other groups within NOAA, such as the Marine Mammals Lab, and external groups such as the Coonamessett Farm Foundation.