Exascale Computing Project – A Personal Summary

We have made it! After 6 years of hard work, the DOE data and visualization community has successfully developed and deployed a high-quality ecosystem to support DOE’s data analysis and visualization needs on exascale supercomputers. In this series of blogs, I am going to present several projects that Kitware has been involved in on the road to exascale. Hopefully, I will be able to bring some friends as guest authors as well.

Let’s start with a bit of history. The Exascale Computing Project (ECP) is a DOE-led effort to build and deploy the first exaflop supercomputers and to develop/improve the software to run on these systems. For a much more detailed description of the project, see its about page. ECP started 7 years ago with a majority of the software projects following a year after, starting in 2017. The project has supported two sets of software projects: applications (the simulation code to model phenomena DOE is interested in, DOE’s bread and butter) and software projects that support these applications.

We at Kitware have been involved in several ECP software projects. In this series, I will focus on projects under the Data Analysis and Visualization (DAV) portfolio. The ECP DAV projects were managed by Jim Ahrens from Los Alamos National Laboratory. DAV projects were multi-institutional affairs, each managed by their project leads. The DAV projects that we have been involved in are the following:

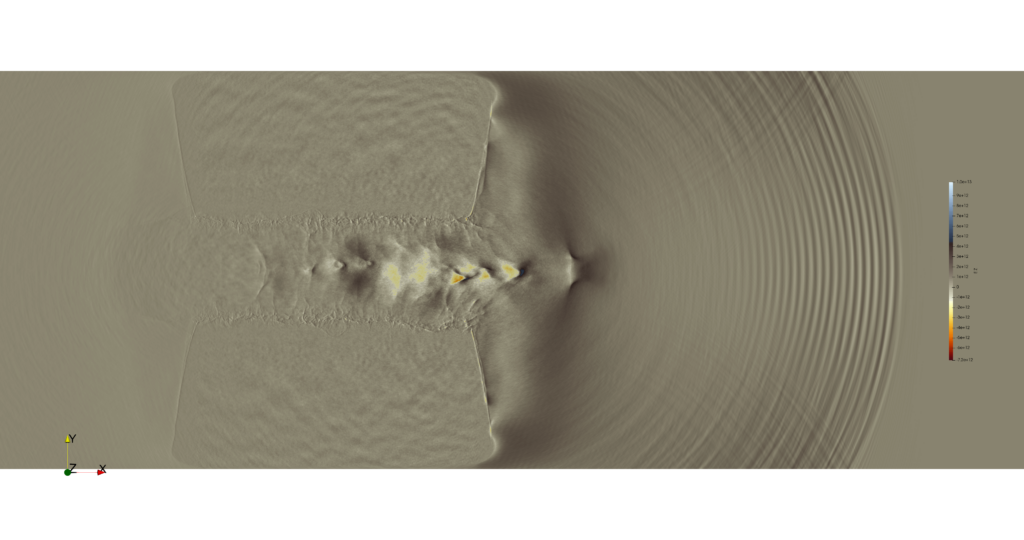

Alpine: The Alpine project has focused on delivering data analysis and visualization capabilities for the exascale era. To this end, we worked on developing the exascale analysis and visualization infrastructure and researching and implementing algorithms that work well in this new paradigm. We developed two in situ infrastructures, Ascent and Catalyst as well as several in situ algorithms ranging from flow analysis to feature tracking. We have also supported and improved post-hoc tools, ParaView and VisIt. Integrating these into the workflow of ECP applications such as MFIX, WarpX, and ExaWind has been a prime objective.

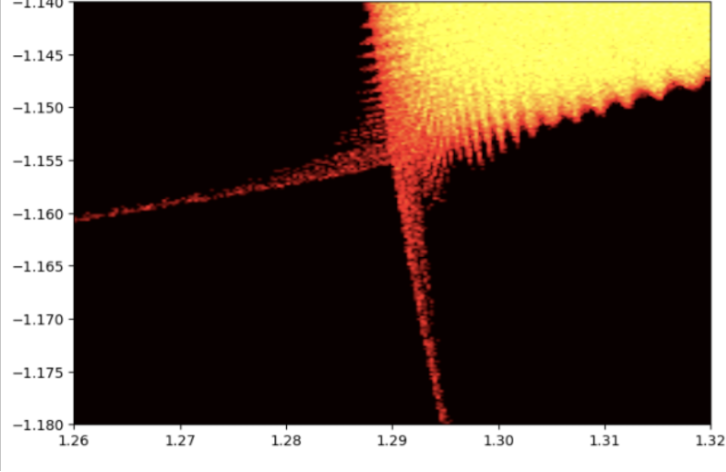

VTK-m: As its name hopefully implies, the ECP VTK-m project focused on developing VTK-m. VTK-m is a performance portable (runs on various CPUs and GPUs and offers comparable performance to natively optimized code) library for developing data analysis and visualization algorithms, especially focused on scientific data. This project has taken many twists and turns as the requirements for GPUs to support have changed (first NVIDIA/CUDA with Summit, then AMD/HIP and Intel/Sycl). VTK-m works well with various backends including Kokkos and supports all major CPUs and GPUs. It is integrated with major visualization and data platforms including ParaView, VisIt, Ascent, Catalyst/ParaView, and ADIOS. It was scaled up to thousands of GPUs.

ADIOS: The ADIOS project has transformed the ADIOS library into a production-quality software package and extended it in many ways to support exascale computing (now called ADIOS2). Quoting from the ECP website “ADIOS is designed to tackle data management challenges posed by large-scale science applications that run on high-performance computers and require, for example, code-to-code coupling for multiphysics and multiscale applications and code-to-service coupling for data analysis and visualization.” Personally, ADIOS is my favorite IO library used both from C++ and Python. Its API is very intuitive and it performs very well. I will write more blogs on my ADIOS experience in the future.

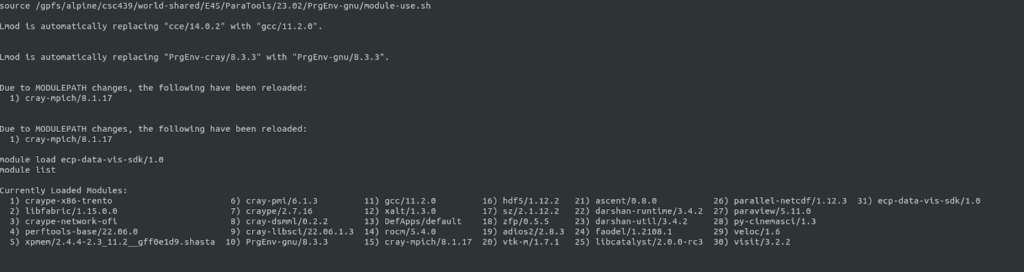

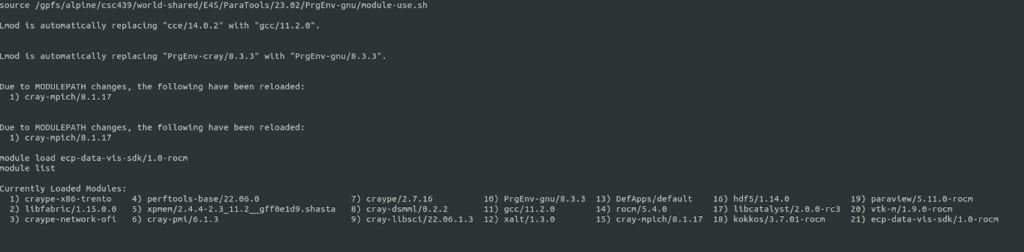

DAV SDK: This project is the glue that brings together the ECP Data Analysis and Visualization portfolio. It is responsible for assisting and sometimes more in the development of the Spack build and CI infrastructure, making sure that the software packages are interoperable (a very hard job given the massive number of dependencies that DAV packages have), that they are integration test, and that they are integrated into the ECP distributions called E4S. DAV SDK has successfully deployed the DAV portfolio on Frontier!