International Geoscience and Remote Sensing Symposium (IGARSS)

Hosted by IEEE

July 16-21, 2023 at the Pasadena Convention Center in Pasadena, CA.

IGARSS is the main conference of the IEEE Geoscience and Remote Sensing Society (GRSS), attracting many remote sensing researchers and engineers from academia, industry, and government. The symposium covers a wide range of geoscience and remote sensing topics, including AI and Big Data, SAR Imaging and Processing, Data Analysis, Cryosphere/Land/Atmospheres/Oceans Applications, Sensors, Mission, Instruments, and Calibration. Kitware has a history of participating in IGARSS as a leader in the processing of satellite imagery.

Kitware’s Computer Vision Team is a leader in creating cutting-edge algorithms and software for automated image and video analysis, with more than two centuries of combined experience across our team in AI/ML and computer vision R&D. Our solutions have made a positive impact on government agencies, commercial organizations, and academic institutions worldwide. Join our team to enjoy numerous benefits, including support for publishing your novel work, attending national and international conferences, and more (you can see all of our benefits by visiting kitware.com/careers).

Kitware’s Activities and Involvement at IGARSS 2023

Novel Object Detection in Remote Sensing Imagery

Thursday, July 20, from 1:00-1:12 PM | TH3.R1: Acquisition and Geometrical Learning for Object Detection | Room B

Authors: Dawei Du, Ph.D. (Kitware), Anthony Hoogs, Ph.D. (Kitware), Katarina Doctor (US Naval Research Lab), Christopher Funk, Ph.D. (Kitware)

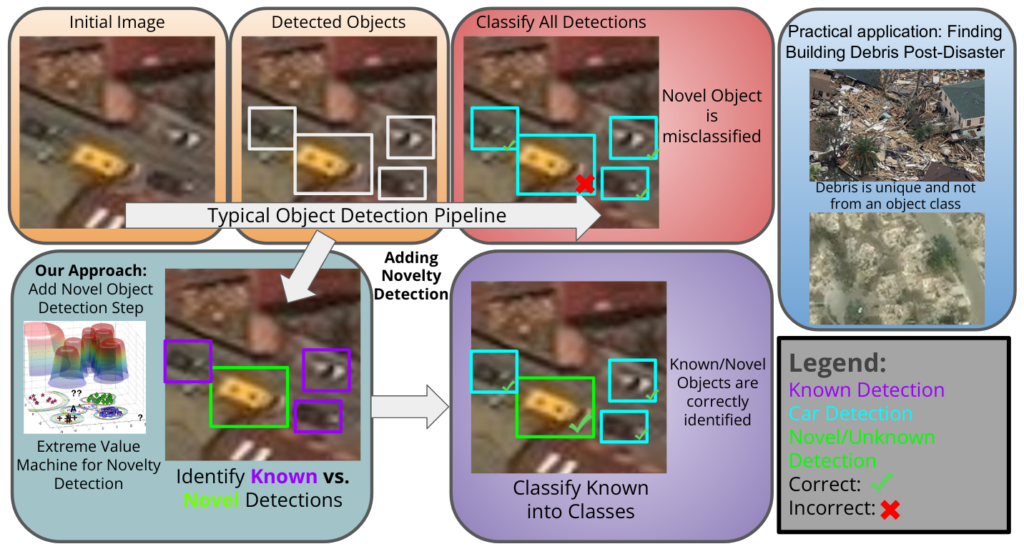

Novel object detection in remote sensing is challenging due to small objects, background clutter, and open-set recognition. To discover and identify objects that are not within the set of known classes in training, we developed a novel object detection method based on extreme value theory. First, generic objects are detected using a state-of-the-art object detector. Then, the extreme value model (EVM) is trained based on the features of extracted detections and a classifier trained on the known object classes. Using the EVM, our method characterizes the distribution of outliers within the overall distribution of known classes to estimate the novelty of each detection. If the novelty score is larger than a threshold, we predict the detection as novel; otherwise the sample is classified by the original object detector. In our experiments, we train a detector on 42 of the 60 classes in the xView satellite imagery dataset, holding out the other 18 classes as novelties. Results on detecting instances of these 18 classes as novelties show the effectiveness of our proposed approach compared to the baseline method of softmax thresholding.
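The decision rule described above can be sketched in a few lines. This is a minimal illustration, not the authors' implementation: it shows only the softmax-thresholding baseline mentioned in the abstract, where the novelty score is taken as one minus the maximum softmax probability. In the proposed method, the score would instead come from the trained EVM. The function names, class names, and threshold value are hypothetical.

```python
import math


def softmax(logits):
    """Convert raw classifier scores into probabilities (numerically stable)."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def softmax_novelty(logits):
    """Baseline novelty score: 1 minus the maximum softmax probability.

    Assumption: this stands in for the EVM-based score, which is not shown here.
    """
    return 1.0 - max(softmax(logits))


def classify_detection(logits, class_names, novelty_score, threshold):
    """Label a detection as novel if its score exceeds the threshold;
    otherwise fall back to the known class with the highest probability."""
    if novelty_score > threshold:
        return "novel"
    probs = softmax(logits)
    return class_names[probs.index(max(probs))]


# Hypothetical known classes and detections, for illustration only.
known_classes = ["building", "truck", "aircraft"]
confident = [5.0, 0.0, 0.0]   # strongly favors one known class
ambiguous = [0.0, 0.0, 0.0]   # no known class stands out

for logits in (confident, ambiguous):
    score = softmax_novelty(logits)
    print(classify_detection(logits, known_classes, score, threshold=0.5))
```

Because `classify_detection` takes the novelty score as an argument, any other scoring function can be swapped in without changing the thresholding step.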

Watch: An Open Source Platform to Monitor Wide Areas for Terrestrial Change

Wednesday, July 19, from 4:21-4:33 PM | WE4.R2: Searching for Activities from Heterogeneous EO Satellite Sensors | Room C

Authors: Matt Leotta, Ph.D. (Kitware), Jon Crall, Ph.D. (Kitware), Connor Greenwell, Ph.D. (Kitware)

Code Link: https://gitlab.kitware.com/computer-vision/geowatch

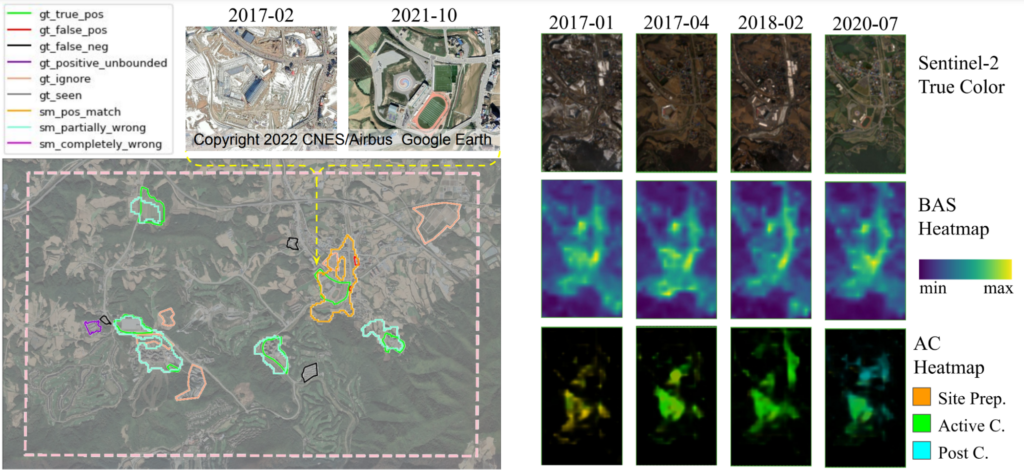

The expansion of available satellite imagery sources in recent years has enhanced global monitoring of our planet. The Earth is constantly changing, but we aim to efficiently sift through incidental change (weather, seasonal variation, etc.) and conduct broad area search (BAS) for sites with specific anthropogenic activities, like building construction. Through activity characterization (AC), we further monitor those sites as they progress through stages of activity over time. Recent advances in artificial intelligence (AI) provide powerful tools for searching through massive spatio-temporal data cubes. However, AI methods typically expect homogeneous data sources. Exploiting data from multiple satellite sources provides useful complementary information but results in a very heterogeneous data cube. For example, Sentinel-2 has moderate resolution and frequent revisits, while WorldView-3 has much higher resolution but less frequent and irregular revisits.

To address these challenges, we have developed the WATCH (Wide Area Terrestrial Change) system. WATCH is an open source software platform for applying AI to detect and characterize activities in large volumes of heterogeneous satellite imagery. The WATCH framework provides advanced geo-spatial data loading capabilities that produce AI-ready data volumes by dynamic alignment, spatial resampling, band selection, and normalization. The framework solves BAS and AC problems by training deep networks on this heterogeneous data. Our approach derives intermediate features from the source imagery as additional inputs to fusion networks trained to address our BAS and AC tasks. In particular, we developed and evaluated the WATCH system using the IARPA SMART problem of detecting heavy construction sites in Landsat, Sentinel-2, WorldView, and PlanetScope imagery.
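To make the data preparation steps concrete, the sketch below shows band selection, spatial resampling to a common grid, and per-band normalization on toy data. It is a simplified illustration under stated assumptions and does not use the WATCH code base or its API: the bands are assumed to already cover the same footprint (so alignment reduces to resampling), nearest-neighbor resampling is used for simplicity, and all function and band names are hypothetical.

```python
def resample_nearest(band, out_h, out_w):
    """Resample a 2D band (list of rows) to out_h x out_w by nearest neighbor."""
    in_h, in_w = len(band), len(band[0])
    return [
        [band[r * in_h // out_h][c * in_w // out_w] for c in range(out_w)]
        for r in range(out_h)
    ]


def normalize(band):
    """Shift and scale a band to zero mean and unit standard deviation."""
    values = [v for row in band for v in row]
    mean = sum(values) / len(values)
    var = sum((v - mean) ** 2 for v in values) / len(values)
    std = var ** 0.5 or 1.0  # avoid dividing by zero for constant bands
    return [[(v - mean) / std for v in row] for row in band]


def prepare_sample(sensor_bands, selected, out_h, out_w):
    """Select bands by name, bring them to a common grid, and normalize each."""
    return [
        normalize(resample_nearest(sensor_bands[name], out_h, out_w))
        for name in selected
    ]


# Toy bands at different resolutions over the same (assumed) footprint.
bands = {
    "coarse_red": [[1, 2], [3, 4]],                     # 2 x 2
    "fine_red": [[r * 4 + c for c in range(4)] for r in range(4)],  # 4 x 4
    "unused": [[0]],
}
sample = prepare_sample(bands, ["coarse_red", "fine_red"], out_h=4, out_w=4)
print(len(sample), len(sample[0]), len(sample[0][0]))
```

The result is a stack of equally sized, normalized bands, which is the kind of homogeneous input that a network typically expects.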

Resonant Geodata: An Open Source Geospatial Data Management, and Visualization Platform

Thursday, July 20, from 2:15-3:45 PM | THP.P14: Data Management Systems and Computing Platforms in Remote Sensing II | Poster Area 14

Authors: Aashish Chaudhary (Kitware), Michael VanDenburgh (Kitware), Bane Sullivan (Kitware), Yonatan Gefen (Kitware), Matt Leotta, Ph.D. (Kitware)

Code Link: https://github.com/ResonantGeoData/ResonantGeoData

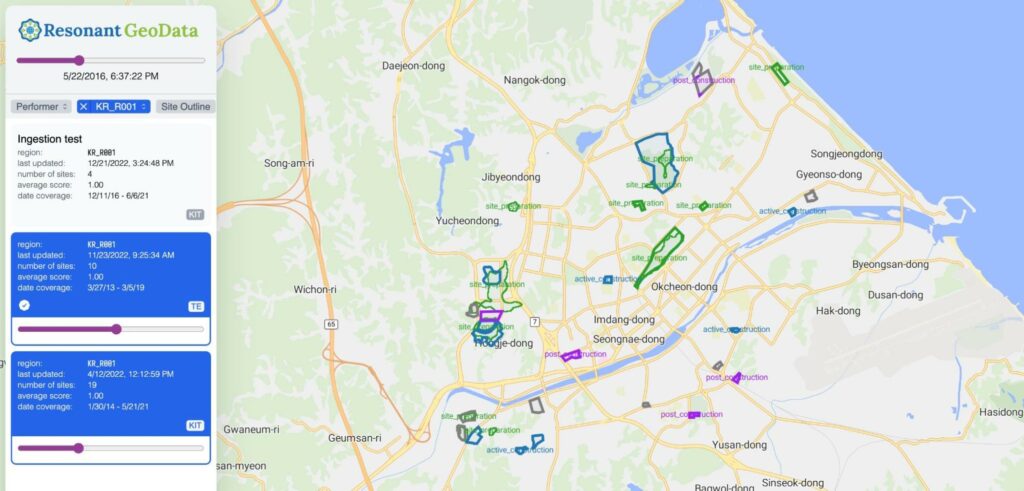

The availability of global satellite imagery has given rise to new applications ranging from tracking deforestation to monitoring crops. However, these data are typically stored and hosted in independent databases or with different cloud providers, so end applications have to work with multiple systems and correlate data across sources. The data often also need to be analysis-ready, meaning processed and appropriately cropped for the downstream application. Finally, the same data are used in multiple contexts: data for visualization need to be in a tiled format to display efficiently on a map, while data for consumption by an AI model need a particular resolution and size. Supporting these differing requirements in a manageable and scalable way is a hard problem.

To this end, we are building an open-source data management system, Resonant GeoData (RGD), to support AI model development and visualization of geospatial and georeferenced datasets. Resonant GeoData is a geospatial cataloging service providing data indexing, metadata extraction, organization, and RESTful endpoints for searching and serving 2D and 3D data directly from distributed cloud storage locations. RGD is being actively developed using open-source tools, open specifications such as OpenAPI and OGC, open standards such as STAC, scalable data processing and database technologies, and best practices for software development. RGD currently enables secure access to geospatial and georeferenced data and faster turnaround for developing broad area search algorithms using a heterogeneous mix of satellite imagery sources. These broad area search algorithms aim to detect and characterize anthropogenic changes for the IARPA SMART program. RGD provides a continually evolving WebUI with a base vector tile map server and an API for annotators.
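As an illustration of what searching a STAC-based catalog can look like, the sketch below builds the JSON body of an item search request using the `bbox`, `datetime`, `collections`, and `limit` fields defined by the STAC API specification. It is an assumption that a given RGD deployment exposes a STAC API-compliant search endpoint; no RGD-specific URL or client call is shown, and the helper name, collection name, and coordinates are hypothetical.

```python
import json


def build_stac_search(bbox, start, end, collections, limit=10):
    """Build a STAC API item-search body.

    bbox is [west, south, east, north] in degrees; start and end are
    RFC 3339 timestamps that form a closed datetime interval.
    """
    if len(bbox) != 4:
        raise ValueError("bbox must be [west, south, east, north]")
    return {
        "bbox": list(bbox),
        "datetime": f"{start}/{end}",
        "collections": list(collections),
        "limit": limit,
    }


# Hypothetical query: one year of imagery over a small area of interest.
body = build_stac_search(
    bbox=[-118.2, 34.1, -118.1, 34.2],
    start="2021-01-01T00:00:00Z",
    end="2021-12-31T23:59:59Z",
    collections=["sentinel-2-l2a"],  # hypothetical collection identifier
)
print(json.dumps(body, indent=2))
```

A client would typically send this body as a POST request to the catalog's search endpoint and page through the returned items.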

Kitware’s Computer Vision Work

Kitware is a leader in Remote Sensing AI, machine learning, and computer vision. We have expertise in all areas of computer vision, such as 3D reconstruction, complex activity detection, object detection, and tracking, as well as emerging areas, such as explainable and ethical AI, interactive do-it-yourself AI, and cyber-physical systems. Kitware’s Computer Vision Team also develops robust lidar technology solutions for autonomy, driver assistance, mapping, and more. To learn more about Kitware’s computer vision work, you can email us at computervision@kitware.com.

Physical Event

Pasadena Convention Center

Pasadena, CA
