The IEEE/CVF Conference on Computer Vision and Pattern Recognition 2023 (CVPR)

June 18-22, 2023 at the Vancouver Convention Center in Vancouver, Canada

Kitware has an extensive history of participating in CVPR as a leader in the computer vision community. CVPR is the premier annual computer vision event in the world, and Kitware is proud to be an exhibitor and Silver sponsor of CVPR 2023.

Join one of the most recognized and credentialed providers of advanced computer vision research across the DoD and IC

Kitware’s Computer Vision Team is a leader in creating cutting-edge algorithms and software for automated image and video analysis, with more than two centuries of combined experience across our team in AI/ML and computer vision R&D. Our solutions have made a positive impact on government agencies, commercial organizations, and academic institutions worldwide.

Join our team to enjoy numerous benefits, including support to publish your novel work, the ability to attend national and international conferences, and more (you can see all of our benefits by visiting kitware.com/careers). If you’re interested in meeting with us to discuss our open positions, please complete this form. You can also stop by our booth #1432 for more information.

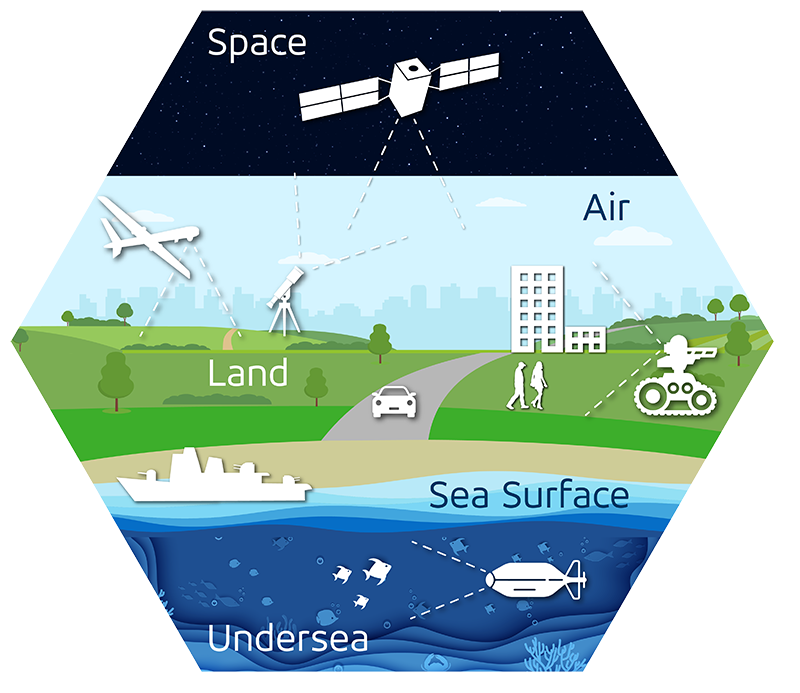

Harnessing the power of AI and ML across all domains

Are you ready to make a difference in the world?

Kitware is Hiring

Computer Vision Researcher – U.S. Citizens

(Clifton Park NY, Carrboro NC, Arlington VA, Minneapolis MN, Remote)

Conduct research and develop robust solutions in the areas of object/activity detection/recognition, motion pattern learning, anomaly detection, open-world learning, image forensics, explainable/ethical AI, and more.

Computer Vision Researcher

(Carrboro NC, Arlington VA, Minneapolis MN, Remote)

Conduct research and develop robust solutions in the areas of object/activity detection/recognition, motion pattern learning, anomaly detection, open-world learning, image forensics, explainable/ethical AI, and more. Open to non-U.S. citizens.

3D Computer Vision Researcher

(Clifton Park NY, Carrboro NC, Arlington VA, Minneapolis MN, Remote)

Conduct research and develop solutions for problems related to camera calibration, registration, structure from motion, neural rendering, neural implicit surfaces, surface meshing, and more.

Technical Leader of Natural Language Processing

(Clifton Park NY, Carrboro NC, Arlington VA, Minneapolis MN, Remote)

Lead research efforts with an emphasis on Natural Language Processing supported by artificial intelligence, machine learning, and deep learning and often in connection with computer vision. Have technical expertise and project management skills to lead teams of researchers, developers, and external collaborators to meet challenges in complex research programs. Lead commercial and federal business development activities to win R&D funding.

Technical Leader of Computer Vision

(Clifton Park NY, Carrboro NC, Remote)

Lead research efforts with an emphasis on computer vision supported by artificial intelligence, machine learning, and deep learning. Have technical expertise and project management skills to lead teams of researchers, developers, and external collaborators to meet challenges in complex research programs. Lead commercial and federal business development activities to win R&D funding.

Machine Learning Engineer

(Clifton Park NY, Carrboro NC, Arlington VA, Minneapolis MN)

Collaborate with researchers on computer vision projects to design, implement, train, and test machine learning and artificial intelligence systems to solve real-world problems.

Computer Vision Research Internships

(Clifton Park NY, Carrboro NC, Arlington VA, Minneapolis MN, Remote)

Collaborate with Kitware computer vision experts for 3-6 months (e.g., Summer 2024), conduct research, and publish results at premier conferences. Open to Ph.D. students in computer vision and related fields. Apply starting Fall 2023.

Events

Earth observation and remote sensing are growing areas of investigation where computer vision, machine learning, and signal/image processing meet. The goal is to provide large-scale, consistent information about the surface of the Earth by exploiting data collected by airborne and spaceborne sensors. This information can be used for a broad range of tasks, including detection, registration, data mining, and multi-sensor, multi-resolution, multi-temporal, and multi-modality fusion and regression. It benefits numerous applications, such as location-based services, online mapping services, large-scale surveillance, 3D urban modeling, navigation systems, natural hazard forecast and response, climate change monitoring, virtual habitat modeling, food security, and more. The high volume of data associated with Earth observation requires highly automated scene interpretation workflows. This workshop will explore related topics, including super-resolution in the spectral and spatial domains, hyperspectral and multispectral image processing, and reconstruction and segmentation of optical and lidar 3D point clouds.

Open Set Action Recognition via Multi-Label Evidential Learning

Authors: Chen Zhao, Dawei Du, Anthony Hoogs, Christopher Funk (Kitware)

![Novelty detection examples of single/multiple actor(s) with single/multiple action(s) in video](https://www.kitware.com/main/wp-content/uploads/2023/05/image1-1-800x394.png)

Novelty detection examples of single/multiple actor(s) with single/multiple action(s) in video [16, 38], where an actor is identified as novel (yellow) rather than being from a known category (cyan) in inference. Existing works [4, 6] on open set action recognition focus on single actor associated with single action (bottom-left), while our method can handle different situations.

Existing methods for open set action recognition focus on novelty detection that assumes video clips show a single action, which is unrealistic in the real world. Our paper proposes a new method for open set action recognition and novelty detection via MUlti-Label Evidential learning (MULE). MULE goes beyond previous novel action detection methods by addressing the more general problems of single or multiple actors in the same scene with simultaneous action(s) by any actor. Our Beta Evidential Neural Network estimates multi-action uncertainty with Beta densities based on actor-context-object relation representations. An evidence debiasing constraint is added to the objective function to reduce the static bias of video representations, which can incorrectly correlate predictions with static cues. We develop a learning algorithm based on a primal-dual average scheme update to optimize the proposed problem and provide a corresponding theoretical analysis. In addition, uncertainty-based and belief-based novelty estimation mechanisms are formulated to detect novel actions. Extensive experiments on two real-world video datasets show that our proposed approach achieves promising performance in single/multi-actor, single/multi-action settings.
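To make the idea of estimating per-action uncertainty with Beta densities more concrete, here is a minimal sketch. It assumes the standard subjective-logic mapping from non-negative evidence to a Beta distribution (alpha = positive evidence + 1, beta = negative evidence + 1), which may differ from the exact formulation in the paper. The names `BetaOpinion`, `beta_opinion`, and `is_novel`, as well as the threshold rule, are hypothetical and used for illustration only.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass(frozen=True)
class BetaOpinion:
    """Belief, disbelief, and uncertainty for one known action class."""
    belief: float
    disbelief: float
    uncertainty: float
    expected_prob: float


def beta_opinion(pos_evidence: float, neg_evidence: float) -> BetaOpinion:
    """Map evidence to a Beta(alpha, beta) opinion (assumed subjective-logic form)."""
    if pos_evidence < 0 or neg_evidence < 0:
        raise ValueError("evidence must be non-negative")
    alpha = pos_evidence + 1.0  # uniform prior contributes 1 to each parameter
    beta = neg_evidence + 1.0
    strength = alpha + beta
    return BetaOpinion(
        belief=pos_evidence / strength,
        disbelief=neg_evidence / strength,
        uncertainty=2.0 / strength,       # shrinks as total evidence grows
        expected_prob=alpha / strength,   # mean of Beta(alpha, beta)
    )


def is_novel(opinions: Sequence[BetaOpinion], threshold: float = 0.5) -> bool:
    """Hypothetical rule: an actor is novel if every known action is uncertain."""
    return min(o.uncertainty for o in opinions) > threshold


# One actor scored against two known actions (evidence values are made up).
confident = beta_opinion(pos_evidence=8.0, neg_evidence=0.0)
unsure = beta_opinion(pos_evidence=0.0, neg_evidence=0.0)
print(confident)
print(is_novel([unsure, unsure]), is_novel([confident, unsure]))
```

Because each action gets its own Beta opinion, an actor can have strong evidence for several actions at once, which is what distinguishes the multi-label setting from a single softmax over classes.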

Kitware’s Computer Vision Expertise Areas

Generative AI

Through our extensive experience in AI and our early adoption of deep learning, we have made significant contributions to object detection, recognition, tracking, activity detection, semantic segmentation, and content-based retrieval for computer vision. With recent shifts in the field from predictive AI to generative AI (or genAI), we are leveraging new technologies such as large language models (LLMs) and multi-modal foundation models that operate on both visual and textual inputs. On the DARPA ITM program, we have developed sample-efficient methods to adapt LLMs for human-aligned decision-making in the medical triage domain.

Dataset Collection and Annotation

The growth in deep learning has increased the demand for quality, labeled datasets needed to train models and algorithms. The power of these models and algorithms greatly depends on the quality of the training data available. Kitware has developed and cultivated dataset collection, annotation, and curation processes to build powerful capabilities that are unbiased and accurate, and not riddled with errors or false positives. Kitware can collect and source datasets and design custom annotation pipelines. We can annotate image, video, text and other data types using our in-house, professional annotators, some of whom have security clearances, or leverage third-party annotation resources when appropriate. Kitware also performs quality assurance that is driven by rigorous metrics to highlight when returns are diminishing. All of this data is managed by Kitware for continued use to the benefit of our customers, projects, and teams. Data collected or curated by Kitware includes the MEVA activity and MEVID person re-identification datasets, the VIRAT activity dataset, and the DARPA Invisible Headlights off-road autonomous vehicle navigation dataset.

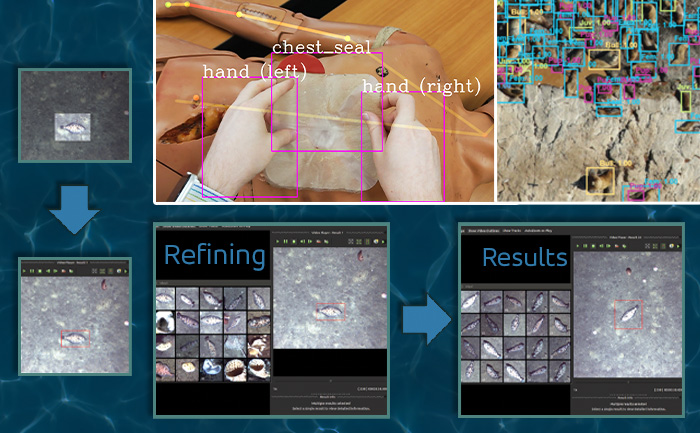

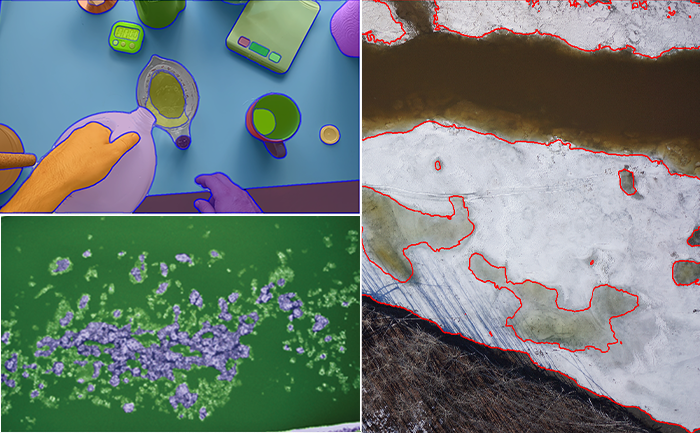

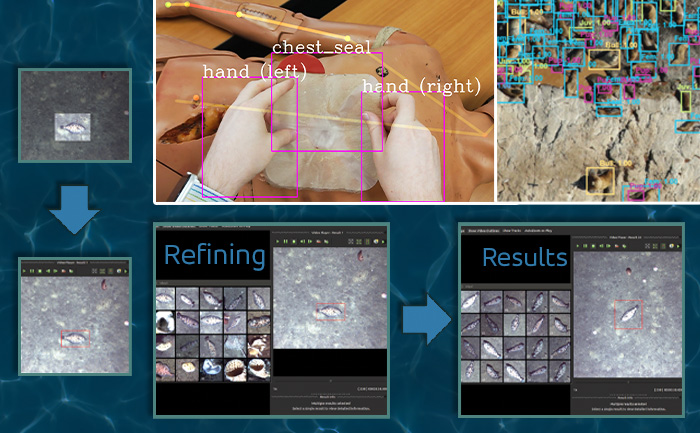

Interactive Artificial Intelligence and Human-Machine Teaming

DIY AI enables end users – analysts, operators, engineers – to rapidly build, test, and deploy novel AI solutions without having expertise in machine learning or even computer programming. Using Kitware’s interactive DIY AI toolkits, you can easily and efficiently train object classifiers using interactive query refinement, without drawing any bounding boxes. You are able to interactively improve existing capabilities to work optimally on your data for tasks such as object tracking, object detection, and event detection. Our toolkits also allow you to perform customized, highly specific searches of large image and video archives powered by cutting-edge AI methods. Currently, our DIY AI toolkits, such as VIAME, are used by scientists to analyze environmental images and video. Our defense-related versions are being used to address multiple domains and are provided with unlimited rights to the government. These toolkits enable long-term operational capabilities even as methods and technology evolve over time.

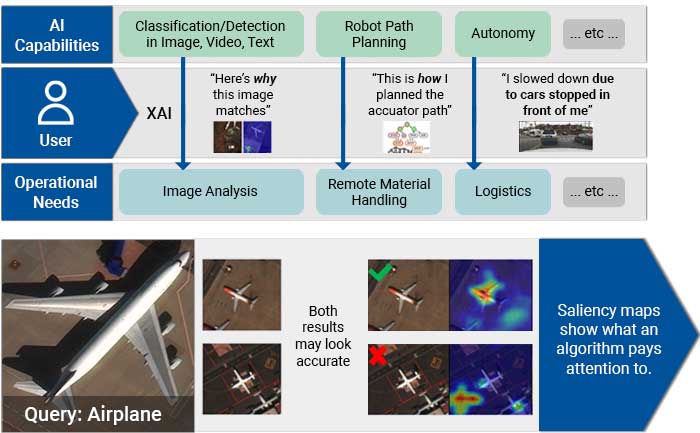

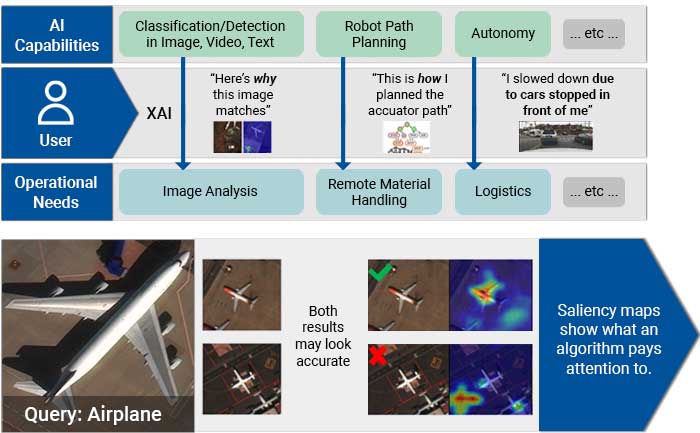

Explainable and Ethical AI

Integrating AI via human-machine teaming can greatly improve capabilities and competence as long as the team has a solid foundation of trust. To trust your AI partner, you must understand how the technology makes decisions and feel confident in those decisions. Kitware has developed powerful tools, such as the Explainable AI Toolkit (XAITK), to explore, quantify, and monitor the behavior of deep learning systems. Our team is also making deep neural networks more robust when faced with previously unknown conditions by leveraging AI test and evaluation (T&E) tools such as the Natural Robustness Toolkit (NRTK). In addition, our team is stepping outside of classic AI systems to address domain-independent novelty identification, characterization, and adaptation, so that systems can acknowledge the introduction of unknowns. We also value the need to understand the ethical concerns, impacts, and risks of using AI. That’s why Kitware is developing methods to understand, formulate, and test ethical reasoning algorithms for semi-autonomous applications. Kitware is proud to be part of the AISIC, a U.S. Department of Commerce Consortium dedicated to advancing the development and deployment of safe, trustworthy AI.

Combatting Disinformation

In the age of disinformation, it has become critical to validate the integrity and veracity of images, video, audio, and text sources. For instance, as photo-manipulation and photo-generation techniques are evolving rapidly, we continuously develop algorithms to detect, attribute, and characterize disinformation that can operate at scale on large data archives. These advanced AI algorithms allow us to detect inserted, removed, or altered objects, distinguish deep fakes from real images or videos, and identify deleted or inserted frames in videos in a way that exceeds human performance. We continue to extend this work through multiple government programs to detect manipulations in falsified media exploiting text, audio, images, and video.

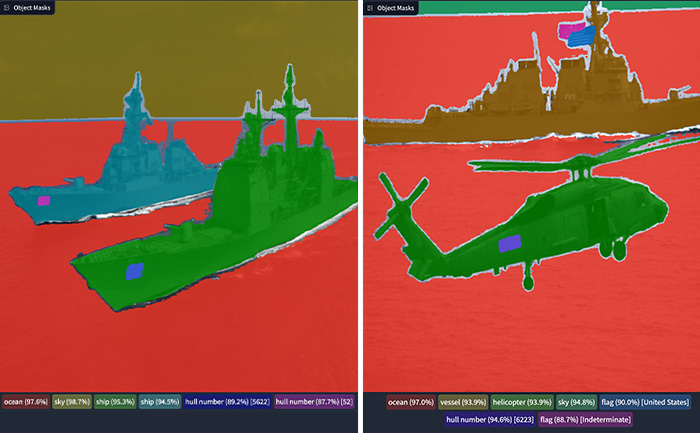

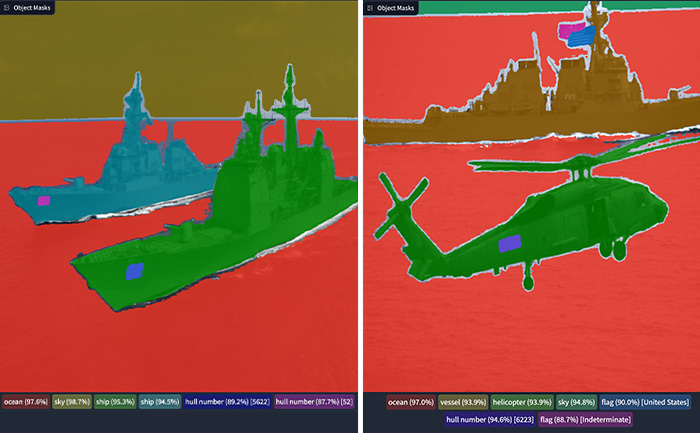

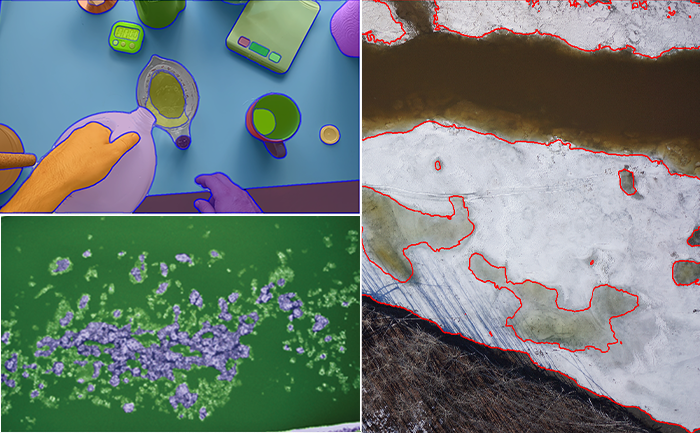

Semantic Segmentation

Kitware’s knowledge-driven scene understanding capabilities use deep learning techniques to accurately segment scenes into object types. In video, our unique approach defines objects by behavior, rather than appearance, so we can identify areas with similar behaviors. Through observing mover activity, our capabilities can segment a scene into functional object categories that may not be distinguishable by appearance alone. These capabilities are unsupervised so they automatically learn new functional categories without any manual annotations. Semantic scene understanding improves downstream capabilities such as threat detection, anomaly detection, change detection, 3D reconstruction, and more.

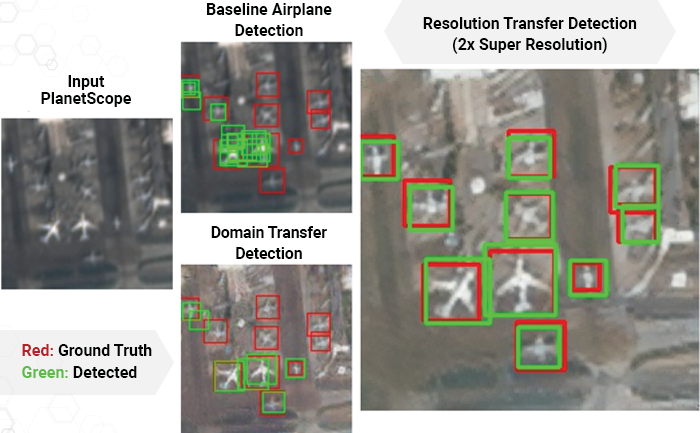

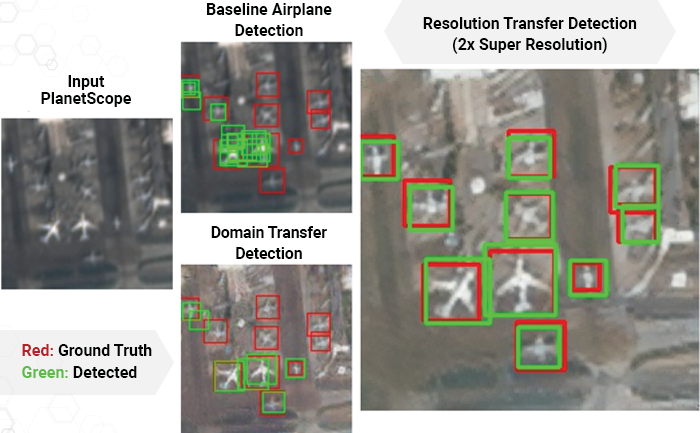

Super Resolution and Enhancement

Images and videos often come with unintended degradation – lens blur, sensor noise, environmental haze, compression artifacts, etc., or sometimes the relevant details are just beyond the resolution of the imagery. Kitware’s super-resolution techniques enhance single or multiple images to produce higher-resolution, improved images. We use novel methods to compensate for widely spaced views and illumination changes in overhead imagery, particulates and light attenuation in underwater imagery, and other challenges in a variety of domains. Our experience includes both powerful generative AI methods and simpler data-driven methods that avoid hallucination. The resulting higher-quality images enhance detail, enable advanced exploitation, and improve downstream automated analytics, such as object detection and classification.

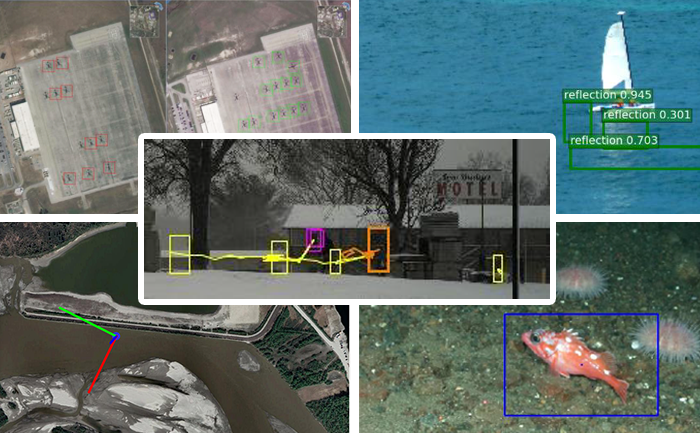

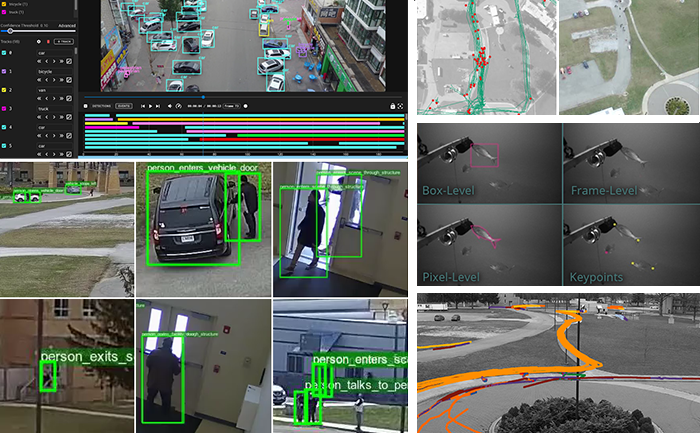

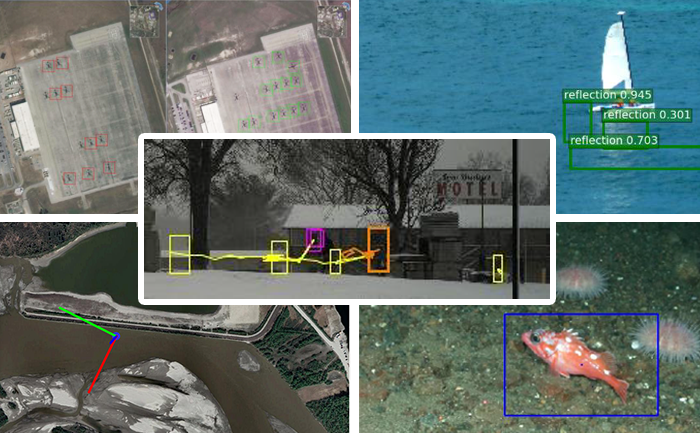

Object Detection and Classification

Our video object detection and tracking tools are the culmination of years of continuous government investment. Deployed operationally in various domains, our mature suite of trackers can identify and track moving objects in many types of intelligence, surveillance, and reconnaissance (ISR) data, including video from ground cameras, aerial platforms, underwater vehicles, robots, and satellites. These tools perform in challenging settings and address difficult factors, such as low contrast, low resolution, moving cameras, occlusions, shadows, and high traffic density, through multi-frame track initialization, track linking, reverse-time tracking, recurrent neural networks, and other techniques. Our trackers can perform difficult tasks including ground camera tracking in congested scenes, real-time multi-target tracking in full-field wide-area motion imagery (WAMI) and overhead persistent infrared (OPIR), and tracking people in far-field, non-cooperative scenarios.

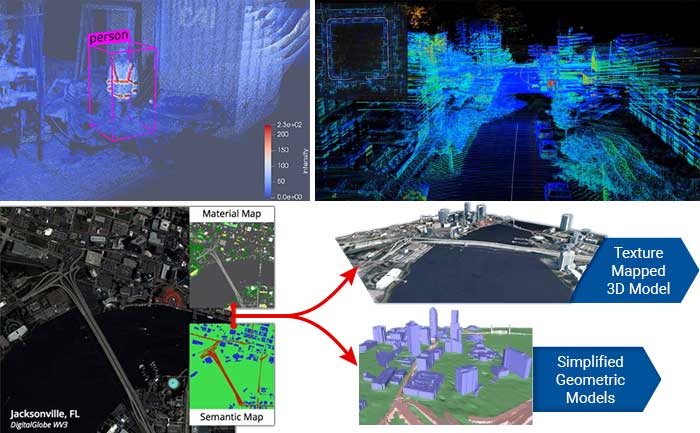

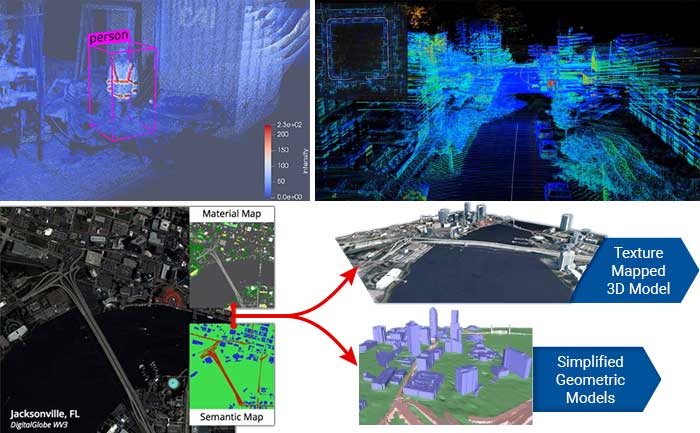

3D Reconstruction, Point Clouds, and Odometry

Kitware’s algorithms can extract 3D point clouds and surface meshes from video or images without metadata or calibration information, while exploiting such information when it is available. Operating on these 3D datasets or others from LiDAR and other depth sensors, our methods estimate scene semantics and 3D reconstruction jointly to maximize the accuracy of object classification, visual odometry, and 3D shape. Our open source 3D reconstruction toolkit, TeleSculptor, is continuously evolving to incorporate advancements to automatically analyze, visualize, and make measurements from images and video. LiDARView, another open source toolkit developed specifically for LiDAR data, performs 3D point cloud visualization and analysis in order to fuse data, techniques, and algorithms to produce SLAM and other capabilities.

Cyber-Physical Systems

The physical environment presents a unique, ever-changing set of challenges to any sensing and analytics system. Kitware has designed and constructed state-of-the-art cyber-physical systems that perform onboard, autonomous processing to gather data and extract critical information. Computer vision and deep learning technology allow our sensing and analytics systems to overcome the challenges of a complex, dynamic environment. They are customized to solve real-world problems in aerial, ground, and underwater scenarios. These capabilities have been field-tested and proven successful in programs funded by R&D organizations such as DARPA, AFRL, and NOAA.

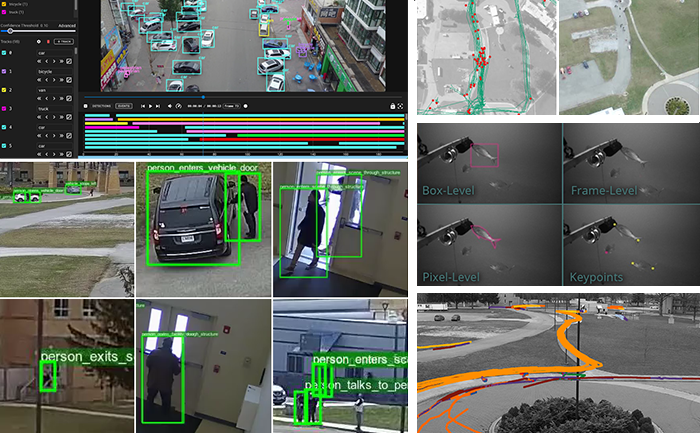

Complex Activity, Event, and Threat Detection

Kitware’s tools detect high-value events, behaviors, and anomalies by analyzing low-level actions and events in complex environments. Using data from sUAS, fixed security cameras, WAMI, FMV, MTI, and various sensing modalities such as acoustic, Electro-Optical (EO), and Infrared (IR), our algorithms recognize actions like picking up objects, vehicles starting/stopping, and complex interactions such as vehicle transfers. We leverage both traditional computer vision deep learning models and Vision-Language Models (VLMs) for enhanced scene understanding and context-aware activity recognition. For sUAS, our tools provide precise tracking and activity analysis, while for fixed security cameras, they monitor and alert on unauthorized access, loitering, and other suspicious behaviors. Efficient data search capabilities support rapid identification of threats in massive video streams, even with detection errors or missing data. This ensures reliable activity recognition across a variety of operational settings, from large areas to high-traffic zones.

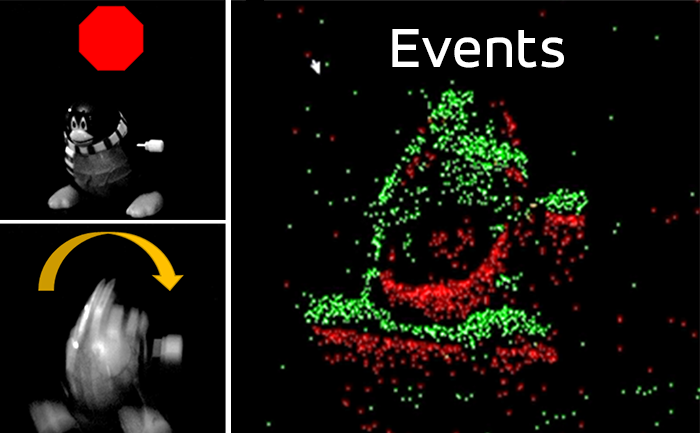

Computational Imaging

The success or failure of computer vision algorithms is often determined upstream, when images are captured with poor exposure or insufficient resolution that can negatively impact downstream detection, tracking, or recognition. Recognizing that an increasing share of imagery is consumed exclusively by software and may never be presented visually to a human viewer, computational imaging approaches co-optimize sensing and exploitation algorithms to achieve more efficient, effective outcomes than are possible with traditional cameras. Conceptually, this means thinking of the sensor as capturing a data structure from which downstream algorithms can extract meaningful information. Kitware’s customers in this growing area of emphasis include IARPA, AFRL, and MDA, for whom we’re mitigating atmospheric turbulence and performing recognition on unresolved targets for applications such as biometrics and missile detection.

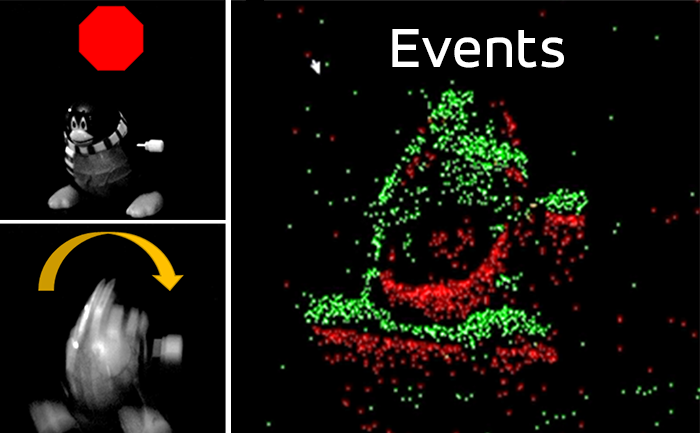

Kitware’s Automated Image and Video Analysis Platforms

Start your Career at Kitware

We are leaders in creating cutting-edge algorithms and software for automated image and video analysis. In joining Kitware’s computer vision team, you will be involved in exciting AI and deep learning projects surrounded by experts who are passionate just like you. Take the first step and submit your resume today.