Cinematic Volume Rendering in Your Web Browser: VolView and VTK.js

Introduction

Kitware recently announced the release of VolView 4.0. This post provides technical details on the cinematic volume rendering (CVR) features in VolView, which are now also available in vtk.js.

CVR provides life-like visualizations by simulating the propagation and interaction of light rays as they pass through volumetric data. Most notably, CVR incorporates statistical models of light scattering that consider the orientations of gradients in the data and lights in the scene.

VolView provides CVR in an intuitive, open-source radiological viewer that runs in your web browser. You can drag-and-drop DICOM files to quickly generate interactive, photorealistic renderings of medical data. Additionally, VolView provides standard radiological measurement and annotation tools, and new capabilities such as support for DICOMWeb and AI inference are already under development.

vtk.js is Kitware’s open-source JavaScript library for scalable, web-based scientific visualization. It runs on phones, tablets, laptops, desktops, and cloud servers, and it takes advantage of GPU acceleration when available. It is designed to work with other web-based scientific tools such as itk.wasm, which provides DICOM I/O, image segmentation, and image registration capabilities, and MONAI, which provides deep learning capabilities for medical data.

In this blog, we focus on the technology of CVR. We present evaluations of the performance of the vtk.js CVR methods on a variety of platforms and for a variety of clinical data. We also illustrate the integration of vtk.js with WebXR, to demonstrate CVR in AR and VR environments.

Many similar CVR capabilities have been added to VTK for use in desktop and server-based C++ and Python applications. We have also shown how VTK’s CVR methods can be used from within Python to drive holographic displays.

The Technology of Cinematic Volume Rendering in vtk.js

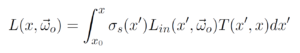

For the CVR methods in vtk.js, we used a simplified version of the volume rendering equation by omitting emission and background radiance terms and using discrete sampling to estimate the integral:

For details on this equation, see our publications at the MICCAI 2022 AE-CAI workshop and in Computer Methods in Biomechanics and Biomedical Engineering.
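
As a rough illustration of what "discrete sampling to estimate the integral" means, the sketch below composites samples along a single ray from front to back, with no emission or background term. It is a minimal sketch, not the vtk.js shader code: the `compositeRay` name, the sample format, and the `opacityCutoff` parameter are assumptions made for this example.

```javascript
// Front-to-back compositing along one ray: a discrete estimate of the
// volume rendering integral without emission or background radiance.
// samples: array of { color: [r, g, b], opacity: number in [0, 1] },
// ordered from the camera into the volume (hypothetical input format).
function compositeRay(samples, opacityCutoff = 0.99) {
  const color = [0, 0, 0];
  let alpha = 0; // opacity accumulated so far
  for (const s of samples) {
    // Fraction of this sample that is still visible from the camera.
    const weight = (1 - alpha) * s.opacity;
    color[0] += weight * s.color[0];
    color[1] += weight * s.color[1];
    color[2] += weight * s.color[2];
    alpha += weight;
    // Early ray termination: later samples are (almost) fully hidden.
    if (alpha >= opacityCutoff) break;
  }
  return { color, alpha };
}

// Usage: a half-transparent red sample in front of an opaque blue one.
const pixel = compositeRay([
  { color: [1, 0, 0], opacity: 0.5 },
  { color: [0, 0, 1], opacity: 1.0 },
]);
console.log(pixel);
```

The shading techniques described below all act on the per-sample color before it enters this accumulation.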

The literature sometimes draws parallels between CVR and multiple scattering. However, several of the vtk.js CVR techniques are less computationally demanding than multiple scattering, and yet they still bring out details that are not present in conventional volume rendering, such as surface gradient, depth, and occlusion. More specifically, we explore three features that are commonly used in CVR (a gradient-based surface model, directional occlusion, and multiple scattering), plus some related techniques that do not fall into these standard categories.

The techniques added to vtk.js to support CVR include the following:

- A Bidirectional Reflectance Distribution Function (BRDF) and improved lighting models

- To lay the foundation for more advanced features, we added support for multiple types of lighting, including positional light source, head light, and camera light. Cone angle, attenuation and one-sided lighting are also implemented in vtk.js. With these additions, lighting and gradient shading modules in vtk.js match those that have been available in VTK. For BRDF reflectance, we use the Phong model to calculate the diffuse, specular and ambient lighting.

Figure 1: (left) direct volume rendering and (right) gradient shading
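
To make the Phong terms mentioned above concrete, here is a minimal sketch of gradient shading at one sample point, where the normalized data gradient plays the role of the surface normal. The function names and the `material` object are hypothetical and chosen for this example; this is the textbook Phong model, not a copy of the vtk.js shader.

```javascript
function dot(a, b) {
  return a[0] * b[0] + a[1] * b[1] + a[2] * b[2];
}

function normalize(v) {
  const len = Math.hypot(v[0], v[1], v[2]);
  // A zero gradient has no direction; return a zero vector in that case.
  return len === 0 ? [0, 0, 0] : v.map((c) => c / len);
}

// Scalar Phong intensity at one sample.
// gradient: data gradient used as the surface normal
// toLight, toViewer: vectors from the sample to the light and the camera
// material: { ambient, diffuse, specular, shininess } (hypothetical format)
function phongIntensity(gradient, toLight, toViewer, material) {
  const n = normalize(gradient);
  const l = normalize(toLight);
  const v = normalize(toViewer);
  const nDotL = Math.max(dot(n, l), 0);
  // Mirror reflection of the light direction about the normal.
  const r = n.map((ni, i) => 2 * dot(n, l) * ni - l[i]);
  const specular =
    nDotL > 0 ? Math.pow(Math.max(dot(r, v), 0), material.shininess) : 0;
  return (
    material.ambient + material.diffuse * nDotL + material.specular * specular
  );
}

// Usage: light and camera both straight along the normal.
const material = { ambient: 0.1, diffuse: 0.7, specular: 0.2, shininess: 10 };
console.log(phongIntensity([0, 0, 1], [0, 0, 1], [0, 0, 1], material));
```

With a zero gradient only the ambient term survives, which is one reason gradient shading alone says little about homogeneous regions.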

- Volumetric Shading

- On top of direct volume rendering, we applied lighting based on secondary contribution. At each sample point, a second ray branches off towards the light source, from which the global occlusion factor is calculated. The option to increase the sampling distance of the secondary rays is provided to further speed up rendering. Rather than traversing the entire ray, users can also specify the length of ray (GlobalIlluminationReach) they would like to consider, and its sample distance (VolumeShadowSamplingDistFactor). For more technical background on volumetric shading, please see this previous post on CVR in VTK.

Figure 2: (left) gradient shading and (right) volumetric shading with 50% shadow ray. Small features are dampened, which can be good or bad depending on the noise in the data.
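
The secondary ray described above can be sketched as follows. This is a simplified illustration under stated assumptions: `opacityAt` is a hypothetical callback that returns the opacity at a point, `reach` and `stepSize` are expressed in the same world units, and no opacity correction for step size is applied. The two parameters play roles similar to GlobalIlluminationReach and VolumeShadowSamplingDistFactor, but their exact units and semantics in vtk.js are not reproduced here.

```javascript
// Fraction of light that survives the trip from a sample point towards the
// light source, sampled only over a limited reach along the shadow ray.
// start: sample position, toLight: non-zero vector towards the light
// opacityAt(p): hypothetical callback returning opacity in [0, 1] at point p
function shadowTransmittance(start, toLight, opacityAt, reach, stepSize) {
  const len = Math.hypot(toLight[0], toLight[1], toLight[2]);
  const dir = toLight.map((c) => c / len);
  const steps = Math.floor(reach / stepSize);
  let transmittance = 1;
  for (let i = 1; i <= steps; i++) {
    const p = start.map((c, k) => c + i * stepSize * dir[k]);
    transmittance *= 1 - opacityAt(p);
    // Stop early once the sample is effectively fully shadowed.
    if (transmittance < 1e-3) return 0;
  }
  return transmittance;
}

// Usage: uniform fog with opacity 0.5 per sample.
const fog = () => 0.5;
console.log(shadowTransmittance([0, 0, 0], [0, 0, 1], fog, 2, 1)); // two samples
console.log(shadowTransmittance([0, 0, 0], [0, 0, 1], fog, 2, 2)); // one coarser sample
```

A shorter reach or a larger step means fewer texture lookups per sample, which is where the speedup comes from, at the cost of missing distant or thin occluders.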

- Hybrid Shading

- Rather than separating gradient-based and volumetric shading, the CVR team at Kitware theorized that a hybrid of these two methods would allow us to balance an image’s local and global features. Gradient-based shadow reflects local details while volumetric shadow creates global ambient occlusion. Our hybrid model interpolates the two shading values based on a user-defined coefficient (VolumetricScatteringBlending), the gradient magnitude, and the opacity value. This work has been submitted for publication.

Figure 3: (left) gradient shading and (right) hybrid with a setting to favor volumetric over gradient-based shading, which results in a softer appearance and reduces the effect of image noise, which, for example, can be useful when rendering ultrasound data.
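
The exact interpolation formula is not given in this post, so the sketch below only illustrates the idea of blending the two shading values. The weighting function is an assumption invented for this example: it keeps gradient shading where there is a strong, opaque surface and leans on volumetric shading elsewhere, with the user coefficient setting the overall balance. All names are hypothetical, and the gradient magnitude is assumed to be normalized to [0, 1].

```javascript
function clamp01(x) {
  return Math.min(Math.max(x, 0), 1);
}

// Blend gradient-based and volumetric shading values at one sample.
// blending: user coefficient in [0, 1]; 0 = gradient only, 1 = volumetric only
// gradientMagnitude: assumed normalized to [0, 1]
// opacity: opacity of the sample in [0, 1]
function hybridShade(gradientShade, volumetricShade, blending, gradientMagnitude, opacity) {
  const b = clamp01(blending);
  // ASSUMPTION: "surface confidence" is high for opaque samples with strong gradients.
  const surfaceConfidence = clamp01(gradientMagnitude) * clamp01(opacity);
  // ASSUMPTION: illustrative weight, not the published formula.
  const volumetricWeight = b * (1 - (1 - b) * surfaceConfidence);
  return (1 - volumetricWeight) * gradientShade + volumetricWeight * volumetricShade;
}

// Usage: same shading inputs, on a crisp surface and in a homogeneous region.
console.log(hybridShade(1.0, 0.0, 0.5, 1.0, 1.0)); // leans towards gradient shading
console.log(hybridShade(1.0, 0.0, 0.5, 0.0, 1.0)); // even split
```

Whatever the precise weighting, the endpoints behave the same way: a coefficient of 0 reduces to gradient shading and a coefficient of 1 reduces to volumetric shading.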

- Local Ambient Occlusion

- Local ambient occlusion (LAO) is a local shading technique. Compared to global lighting, which considers the entire volume, LAO calculates light contributions from a set of neighboring voxels. At each sample point, it branches several rays towards random directions within a hemisphere and traces several voxels in each direction. The accumulated translucency is averaged to represent the amount of light occluded by those voxels.

- Users can define the number of ray directions (LAOKernelSize) and the number of voxels traced (LAOKernelRadius). When the number of rays equals 1, it is almost equivalent to the volumetric shading method with short ray length. However, LAO creates soft ambient shadow and avoids fully shadowed regions, which is often desired for medical visualization. In our implementation, LAO contribution is added to the ambient component of the BRDF model.

- Additional CVR options

- The above base algorithms can be applied with the local gradient computed using either the opacity or density normal. We have found opacity gradient computations to be particularly useful in medical images, where tissues are often made transparent to avoid occlusion, and correspondingly their contributions to the visualization computations need to be minimized.
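
Returning to local ambient occlusion, the procedure described above (several random rays in a hemisphere, a few voxels traced along each, translucency averaged) can be sketched as follows. This is a minimal sketch under assumptions: the hemisphere is taken around the gradient normal, voxel spacing is one unit, `opacityAt` is a hypothetical lookup callback, and the `random` parameter exists only to make the example testable. The `kernelSize` and `kernelRadius` arguments mirror the roles of LAOKernelSize and LAOKernelRadius.

```javascript
function dot3(a, b) {
  return a[0] * b[0] + a[1] * b[1] + a[2] * b[2];
}

// Uniformly distributed direction on the unit sphere.
function randomUnitVector(random) {
  const z = 2 * random() - 1;
  const phi = 2 * Math.PI * random();
  const r = Math.sqrt(1 - z * z);
  return [r * Math.cos(phi), r * Math.sin(phi), z];
}

// Average translucency seen from a sample point over nearby voxels.
// Returns 1 when nothing nearby occludes and 0 when fully enclosed.
function localAmbientOcclusion(point, normal, opacityAt, kernelSize, kernelRadius, random = Math.random) {
  let total = 0;
  for (let k = 0; k < kernelSize; k++) {
    let dir = randomUnitVector(random);
    // Flip directions that point into the surface so all rays stay in
    // the hemisphere around the normal (assumed choice of hemisphere).
    if (dot3(dir, normal) < 0) dir = dir.map((c) => -c);
    let translucency = 1;
    for (let step = 1; step <= kernelRadius; step++) {
      const p = point.map((c, i) => c + step * dir[i]);
      translucency *= 1 - opacityAt(p);
    }
    total += translucency;
  }
  return total / kernelSize;
}

// Usage: a point on a floor (opaque below z = 0, empty above).
const floor = (p) => (p[2] < 0 ? 1 : 0);
console.log(localAmbientOcclusion([0, 0, 0], [0, 0, 1], floor, 8, 3)); // unoccluded
```

In a shading pipeline like the one described here, the returned value would scale the ambient term, which is why LAO softens shadows instead of producing fully black regions.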

Evaluation

To help understand the strengths and weaknesses of these CVR options, we evaluated their performance on multiple hardware platforms using a variety of medical data:

- Large-size chest CT scan

- 512x512x321 – 214MB

- Features multiple opaque structures

- Medium-size fetus 3D ultrasound scan (from TomoVision.com)

- 368x368x234 – 161MB

- Features significant, structured noise

- Small-size head CT scan

- 256x256x94 – 15.6MB

- Features opaque and transparent structures

Figure 5: The three datasets used for benchmarks: (left) Head CT, (middle) Chest CT, (right) fetus ultrasound

As shown in Figure 6, we benchmarked rendering speed on the three test datasets using five different sets of parameters: (1) direct volume rendering with ray casting, (2) gradient-based shading, (3) volumetric shading with full shadow ray, (4) volumetric shading with partial shadow ray, and (5) a mixture of gradient-based shading and volumetric shading. Here “partial” means that 10% of the secondary ray length is considered.

Figure 6: Interactive render time for three datasets and rendering techniques. Interactive render time represents the average time to render one frame while the user rotates or pans the volume.

Figure 7: First render time for three datasets and rendering techniques. First render time is the time span starting from creating the WebGL context to delivering the first rendered image.
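
For readers who want to collect a comparable "average time per frame" number in their own experiments, a minimal timing helper might look like the sketch below. It is not the benchmark harness used for the figures above. It assumes that `renderFrame` is a hypothetical callback that blocks until the frame is finished; GPU work is often asynchronous, so a real measurement would also need to wait for the GPU to complete each frame.

```javascript
// Average wall-clock time per frame, in milliseconds.
// renderFrame(i): hypothetical callback that renders frame i synchronously.
function averageFrameTime(renderFrame, frameCount) {
  if (frameCount <= 0) throw new Error('frameCount must be positive');
  const start = performance.now();
  for (let i = 0; i < frameCount; i++) {
    renderFrame(i);
  }
  return (performance.now() - start) / frameCount;
}

// Usage with a stand-in workload instead of a real render call.
const ms = averageFrameTime(() => {
  let sum = 0;
  for (let k = 0; k < 1e5; k++) sum += Math.sqrt(k);
  return sum;
}, 20);
console.log(`average frame time: ${ms.toFixed(3)} ms`);
```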

It is worth noting that computationally demanding rendering, such as volumetric shadow with full shadow ray, did lead to test failures when the GPU was not powerful enough, as indicated by the missing entry in Figure 6. We also discovered that render time does not necessarily increase linearly with file size. The head CT data has much more translucent tissue (i.e., more voxels traversed by each ray) than the other two datasets, so it requires almost twice the render time for CVR techniques.

LAO was recently developed and therefore was not included in the speed test. Depending on the specific parameters, LAO could be either slower or faster than volumetric shading.

vtk.js

In vtk.js, CVR-related parameters are exposed in the VolumeMapper module. Figure 8 is a flowchart that shows which rendering method will be used. Gradient shading can be combined with either volumetric shading or LAO, but not both.

Figure 8: Chart showing rendering pipeline for CVR module and available parameters
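
As a hedged sketch of how the parameters named in this post might be applied together, the helper below calls `set<Name>` on a mapper for each entry in a settings object. Two things are assumptions: that each parameter has a setter following that naming convention (check the VolumeMapper API page for your vtk.js version), and that a VolumetricScatteringBlending value above zero is what switches volumetric shading on. A mock mapper stands in for vtk.js so the example runs on its own.

```javascript
// Apply CVR settings to a mapper-like object that exposes set<Name> methods.
// settings keys are the parameter names used in this post, e.g.
// volumetricScatteringBlending, globalIlluminationReach, LAOKernelSize.
function applyCvrSettings(mapper, settings) {
  // ASSUMPTION: blending > 0 is treated as "volumetric shading enabled".
  const usesVolumetric = (settings.volumetricScatteringBlending ?? 0) > 0;
  const usesLao = settings.localAmbientOcclusion === true;
  if (usesVolumetric && usesLao) {
    throw new Error('Choose volumetric shading or LAO, not both');
  }
  for (const [name, value] of Object.entries(settings)) {
    const setterName = 'set' + name[0].toUpperCase() + name.slice(1);
    if (typeof mapper[setterName] !== 'function') {
      throw new Error(`Mapper has no ${setterName}()`);
    }
    mapper[setterName](value);
  }
  return mapper;
}

// Mock standing in for a vtk.js volume mapper (hypothetical, for illustration).
function createMockMapper(parameterNames) {
  const mapper = { values: {} };
  for (const name of parameterNames) {
    const setterName = 'set' + name[0].toUpperCase() + name.slice(1);
    mapper[setterName] = (value) => {
      mapper.values[name] = value;
    };
  }
  return mapper;
}

// Usage: a fast LAO configuration with few, short rays.
const mock = createMockMapper(['localAmbientOcclusion', 'LAOKernelSize', 'LAOKernelRadius']);
applyCvrSettings(mock, { localAmbientOcclusion: true, LAOKernelSize: 5, LAOKernelRadius: 3 });
console.log(mock.values);
```

Rejecting the combination up front is a design choice of this sketch; it simply makes the "not both" rule visible instead of leaving the outcome to the rendering pipeline.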

Picking a setting or technique that increases rendering speed will most often lead to a drop in rendering quality, which manifests as a lower level of detail, more speckles, or both. When rendering a dataset, we suggest users experiment with different lighting settings, rendering techniques, and parameters to find the best combination of rendering methods and conditions. The upper limit of rendering quality depends on many factors, such as file size, medical data type, sample distance, and opacity transfer function. It is always advisable to start with high-speed settings and then gradually refine rendering quality. For a preview of the volumetric shadow feature, please see the VolumeMapperLightAndShadow example; for local ambient occlusion, please see the LAODemoHead example.

The type of data can also help guide the decision. For CT scans, gradient-related techniques are more often preferred than global shading alone. For ultrasound, choosing suitable color and opacity transfer functions is vital before adding any CVR features. Often, masking is also necessary to block out redundant volume or noise in ultrasound data. We also noticed that gradient-based methods often impose an overwhelmingly metallic look that makes the ultrasound data appear unrealistic.

Future work

This work provides a preliminary foundation for applying CVR methods and for predicting the tradeoffs in rendering computation time. In our MICCAI 2022 AE-CAI workshop and Computer Methods in Biomechanics and Biomedical Engineering publications, we also conducted a user preference study using these images. That study provides additional insights regarding when to use which algorithm, although task-specific performance studies are ultimately needed rather than general preference studies. Also, as noted, LAO is a recent addition to this foundation. It appears to offer good flexibility in balancing quality against time, but it was not included in the timing or the preference studies. Additionally, we have tried several multiple scattering algorithms. In all cases, their computation time was significantly larger unless a very conservative number of iterations was used, which provided limited visual improvement, and therefore we did not integrate them with vtk.js. Trading off memory for speed using a deep shadow map, gradient texture, or other optimizations would significantly speed up the scattering process and might lead to acceptable rendering performance in combination with level-of-detail rendering heuristics.

For additional insights and to conduct your own explorations and evaluations, see

- VolView 4.0 release announcement

- VolView Website and live VolView application

- Blog on Cinematic Volume Rendering in VTK and ParaView

- https://kitware.github.io/vtk-js/api/Rendering_Core_VolumeMapper.html

- https://kitware.github.io/vtk-js/examples/VolumeMapperLightAndShadow.html

- https://kitwaremedical.github.io/vtk.js-render-tests/LAODemoHead.html

The majority of this work was performed by Jiayi Xu, during her summer internship at Kitware. Collaborators included Gaspard Thevenon, Timothee Chabat, Forrest Li, Tom Birdsong, Ken Martin, Brianna Major, Matt McCormick, and Stephen Aylward.

This work was funded, in part, by the NIH via NIBIB and NIGMS R01EB021396, NIBIB R01EB014955, NCI R01CA220681, and NINDS R42NS086295.