Can Humans Rely on Large Language Models to Make Important Decisions?

Generative AI is quickly growing in popularity. Large language models (LLMs), a form of generative AI, are being used in many applications, from improved search engines to coding assistants, and are publicly accessible through products like OpenAI’s GPT-4o.

LLMs use machine learning to understand and generate text and are trained on vast amounts of data. They differ from predictive AI models (which represent the majority of previously developed AI applications) in that they learn patterns in data to create new content rather than analyzing data to make predictions. Unlike these traditional AI models, LLMs can be applied to a wide variety of tasks and problems. While this makes them hugely valuable, LLMs can also generate unexpected, even unethical, responses. This may not sound like a big deal if you’re using ChatGPT to write a greeting card or create a video for your child’s graduation. But if you’re using this technology to perform a work task or make a difficult, critical decision, such as which patient to treat first in a medical triage situation, it becomes paramount that the technology functions as expected.

The U.S. Department of Defense’s five ethical principles for AI provide a useful framework for thinking about the ethical use of AI technologies. Let’s see how these principles (responsible, equitable, traceable, reliable, and governable) illustrate the risks of LLMs:

- Responsible: LLMs can provide information that is inappropriate or even dangerous.

- Equitable: LLMs may propagate underlying, unintended biases embedded in their training data.

- Traceable: LLMs can produce responses that are not grounded in facts or knowledge.

- Reliable: Small, seemingly insignificant changes in prompts can result in very different responses.

- Governable: The behavior of LLMs can be influenced by prompts and fine-tuning, but it is difficult to predict how effective these measures will be in a given application. Furthermore, the large computing resources needed to train and run LLMs make evaluating their capabilities difficult.

As you can see, there is still much work to be done to ensure that LLMs meet these ethical standards.

Working to Make LLMs Ethical and Trustworthy

Since Kitware is committed to ethical AI, we have been actively working on projects and researching ways to develop LLMs that align with these ethical AI standards. We are currently participating in the government-funded DARPA In the Moment (ITM) program, which aims to establish AI algorithms that humans can trust to help make difficult decisions. ITM is initially focused on medical triage decision-making, but, if successful, this framework would be used to develop decision-making technology in other areas.

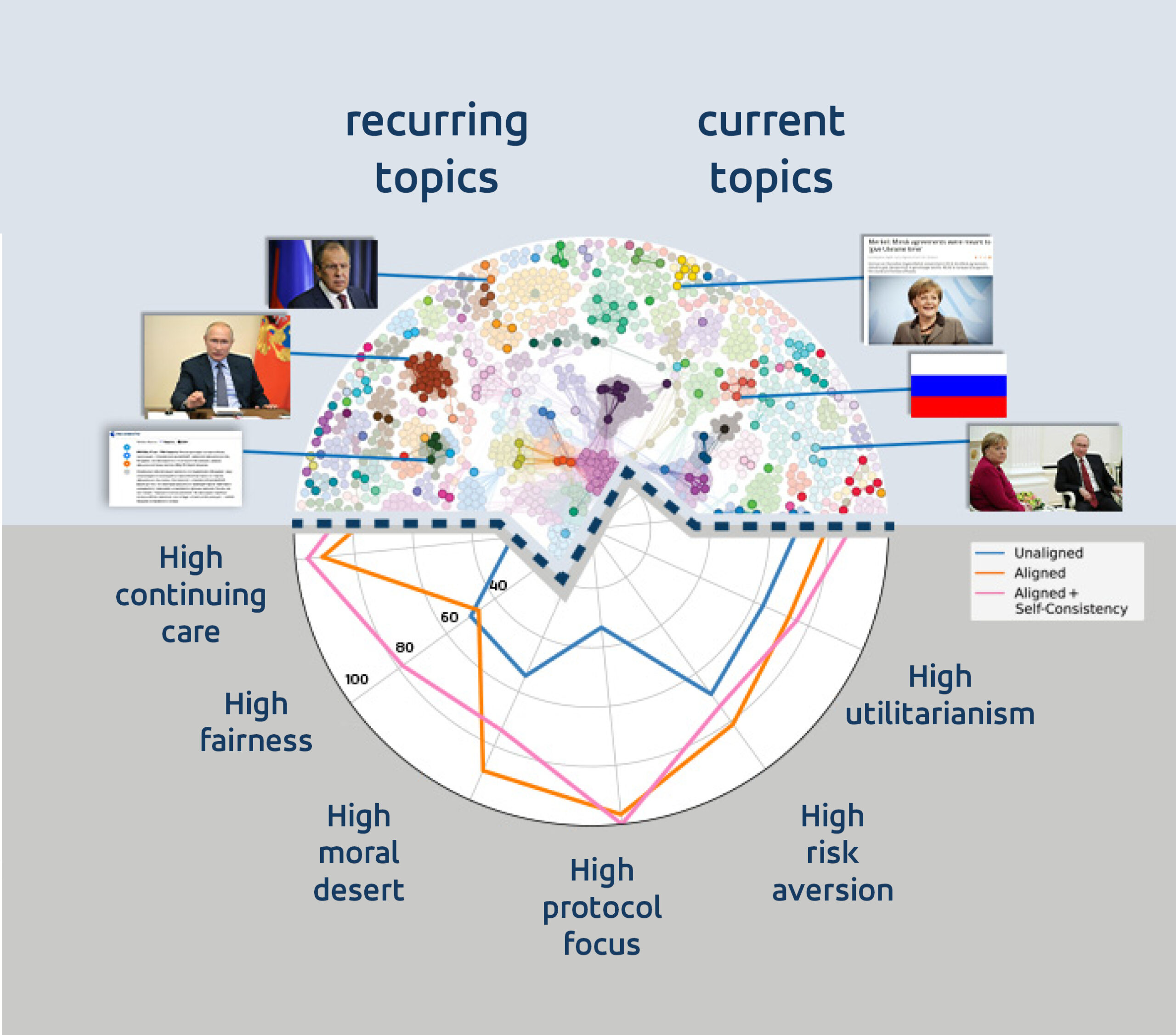

Under this program, Kitware developed the open source Aligned Moral Language Models with Interpretable and Graph-based Knowledge (ALIGN) system. ALIGN combines the knowledge represented in large language models and knowledge graphs with the ability to easily adapt these algorithms to new scenarios and attributes through few-shot learning. The system also incorporates explainability so the algorithms can explain why decisions were made. ALIGN can dynamically adapt to a variety of users, settings, and scenarios so it can consistently produce trusted responses.

We are excited to have the opportunity to showcase the capabilities of ALIGN outside of the ITM program. We recently presented a research paper at the 2024 Annual Conference of the North American Chapter of the Association for Computational Linguistics (NAACL 2024) that tackles the problem of aligning LLMs to human preferences during medical triage. We created an annotated dataset of text-based scenarios spanning six different decision-maker attributes, including several ethical and moral principles. We also showed that our proposed zero-shot and weighted self-consistency approach can improve alignment across four commonly used open-access models (Llama-7/13B, Falcon, and Mistral). Overall, this work provides a foundation for studying the use of LLMs as alignable decision-makers in different domains.
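
To make the idea of weighted self-consistency more concrete, here is a minimal Python sketch of weighted voting over several sampled model answers. It illustrates the general technique only and is not the implementation from the paper: the function name `weighted_self_consistency`, the sample answers, and the weights are all hypothetical, and the way weights are assigned in practice is an assumption left to the reader.

```python
from collections import defaultdict
from typing import Hashable, Iterable, Tuple


def weighted_self_consistency(samples: Iterable[Tuple[Hashable, float]]) -> Hashable:
    """Return the answer with the largest total weight across all samples.

    Each sample is an (answer, weight) pair. The weighting scheme is an
    assumption for illustration, not the scheme used in the paper.
    """
    totals = defaultdict(float)
    for answer, weight in samples:
        totals[answer] += weight
    if not totals:
        raise ValueError("at least one sample is required")
    # On a tie, max() keeps the answer that was seen first.
    return max(totals, key=totals.get)


# Hypothetical samples: the option an LLM chose on each run, paired with
# a vote weight. Negative weights stand in for votes that count against
# an option.
samples = [("A", 1.0), ("B", 1.0), ("A", 1.0), ("B", -1.0), ("A", -1.0)]
choice = weighted_self_consistency(samples)
print(choice)
```

Summing weights instead of counting raw votes lets some samples count for more than others, or count against an option, while still aggregating over many runs to reduce the effect of any single unexpected response.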

AI for Social Good

As the use of AI continues to grow, the demand for unbiased, trustworthy, and explainable AI has become critical. Kitware has been at the forefront of research in ethical AI for the past seven years, developing methods and leading studies on how AI can be trusted, how it can make moral decisions like humans, and how it can be harnessed to benefit society while minimizing risk.

We believe that fair, balanced, and large-scale datasets are necessary for training any AI model, not just LLMs. We have developed and cultivated dataset collection, annotation, and curation processes that minimize bias. We are also proud to be part of the AISIC, a U.S. Department of Commerce consortium dedicated to advancing the development and deployment of safe, trustworthy AI. Contact us if you would like to learn more about our ethical AI expertise or discuss how you can incorporate these principles into your business practices and technology.