3D point cloud object detection in LidarView

More than a year ago, we presented an example of integrating point cloud deep learning frameworks inside LidarView. Here is more information on that feature and on the latest advancements, including a complete new installation guide for anyone who wants to run AI models within LidarView.

In this article, we used an internal development version of LidarView (soon to be released) based on the latest ParaView 5.11 on Linux.

We are still testing this feature on Windows, so stay tuned!

Using one of the latest NVIDIA graphics cards for faster inference is also a real plus.

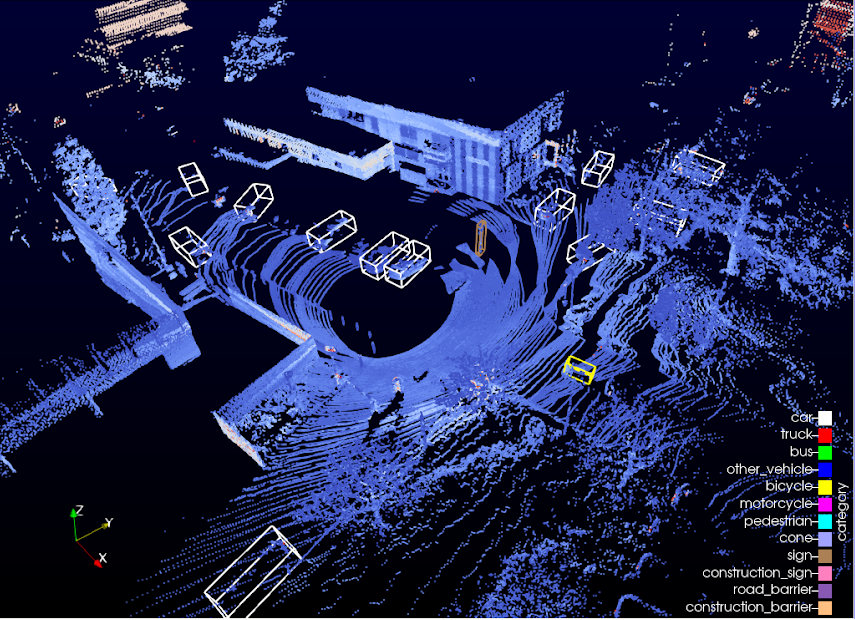

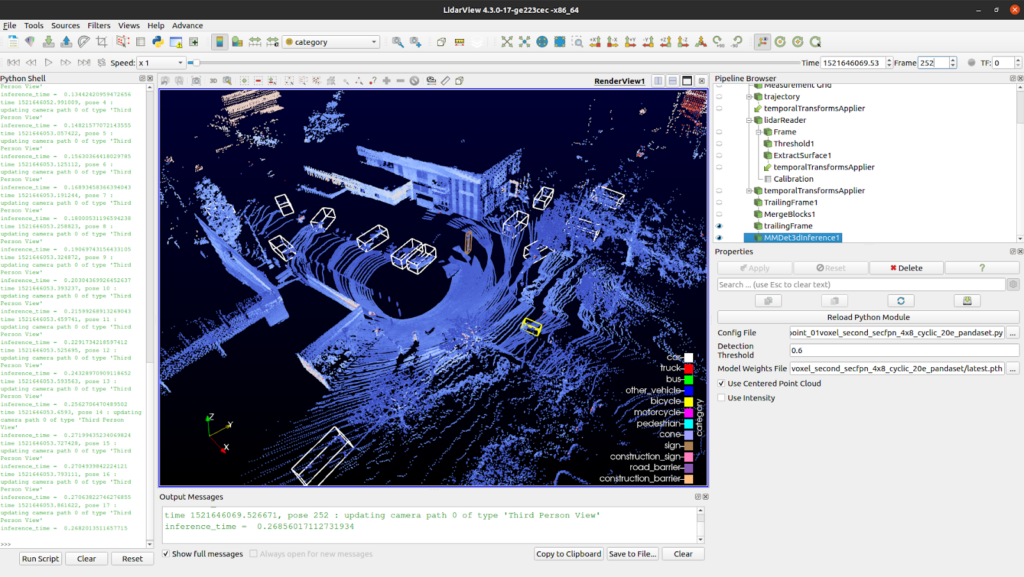

Example of the AI plugin inside LidarView

A primer on our 3D point cloud object detection

Deep learning is today’s de facto choice for anyone who wants to power artificial intelligence inside their application. A standard object detection model can be divided into two components:

- the backbone, which extracts important information from the raw data

- the head, in charge of the detection itself (bounding boxes)

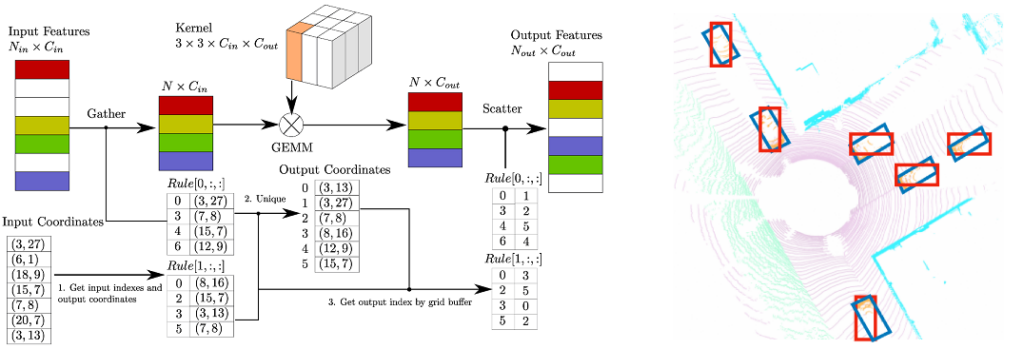

We decided to go with a combination of two state-of-the-art models, namely SECOND as the backbone and CenterPoint as the detection head. The sparsity of 3D point clouds is challenging, and that is where SECOND’s ability to voxelize the input space and apply sparse operations on it is important for us. At the end of the data processing pipeline, CenterPoint fits oriented bounding boxes to rotated objects.
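To make the voxelization step more concrete, here is a minimal NumPy sketch that groups points into voxels and keeps only the occupied ones, which is the kind of sparse structure that sparse operations work on. It is an illustration under our own assumptions, not the backbone's actual implementation: the function name `voxelize` and its parameters are hypothetical.

```python
import numpy as np


def voxelize(points, voxel_size, origin):
    """Group points into voxels and describe only the occupied voxels.

    Hypothetical helper for illustration; not taken from any library.
    """
    # Integer voxel index of every point along x, y, z.
    indices = np.floor((points - origin) / voxel_size).astype(np.int64)
    # Keep each occupied voxel once and remember which voxel each point fell in.
    occupied, inverse, counts = np.unique(
        indices, axis=0, return_inverse=True, return_counts=True
    )
    inverse = inverse.reshape(-1)
    # Average the points of each occupied voxel to get one centroid per voxel.
    sums = np.zeros((len(occupied), 3))
    np.add.at(sums, inverse, points)
    centroids = sums / counts[:, None]
    return occupied, centroids, counts


demo_points = np.array([[0.1, 0.1, 0.1], [0.2, 0.2, 0.2], [1.5, 0.1, 0.1]])
occupied, centroids, counts = voxelize(demo_points, voxel_size=1.0, origin=np.zeros(3))
print(occupied)  # two occupied voxels: [0 0 0] and [1 0 0]
print(counts)    # [2 1]
```

Only occupied voxels are stored, so memory and computation scale with the number of points rather than with the volume of the scene.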

Sparse convolution algorithm used in SECOND (left). Difference between standard anchor-based detection (highlighted in red) and center-based detection (highlighted in blue) (right).
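As an illustration of what an oriented bounding box is, the sketch below computes the eight corners of a box described by a center, a size, and a yaw angle around the vertical axis. The function `box_corners` is hypothetical, and the box convention actually used by the detection head may differ (for example in where the box origin sits or in the sign of the yaw).

```python
import numpy as np


def box_corners(center, size, yaw):
    """Return the 8 corners of a box rotated by `yaw` (radians) around z.

    Hypothetical helper: the box is assumed to be centered on `center`.
    """
    # Every combination of +/- half-extent along x, y, z in the box frame.
    signs = np.array(
        [[sx, sy, sz] for sx in (-1, 1) for sy in (-1, 1) for sz in (-1, 1)]
    )
    local = 0.5 * signs * np.asarray(size, dtype=float)
    # Rotation around the vertical axis.
    c, s = np.cos(yaw), np.sin(yaw)
    rotation = np.array([[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]])
    return local @ rotation.T + np.asarray(center, dtype=float)


# A car-sized box, first axis-aligned, then rotated by 90 degrees.
aligned = box_corners(center=(0, 0, 0), size=(4.0, 2.0, 1.5), yaw=0.0)
rotated = box_corners(center=(0, 0, 0), size=(4.0, 2.0, 1.5), yaw=np.pi / 2)
print(aligned.max(axis=0))
print(rotated.max(axis=0))
```

After the 90 degree rotation, the long side of the box lies along y instead of x, which an axis-aligned box of fixed size could not represent.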

One of the most important aspects when training machine-learning algorithms is access to large and diverse datasets, such as the KITTI Vision Benchmark Suite for autonomous vehicles. Originally, the proposed model was trained on nuScenes. We decided to re-train it using PandaSet, which provides a better resolution (64 beams instead of 32) and hence a more accurate representation of the objects. PandaSet also has a more permissive license than nuScenes.

Installation guide

Fortunately for us, MMDetection3D (developed and maintained by OpenMMLab) is a library under the Apache License 2.0 that already implements the two aforementioned models.

Installing the toolbox can be complicated, as it relies on hardware-dependent Python libraries like PyTorch. This is why we are sharing with the community a complete installation guide that can be used to install all components from scratch and integrate them into LidarView.

One of the important additions is the use of ParaView's venv support to make a locally installed PyTorch available inside LidarView.

Running the plugin

After a successful installation, you should be able to run the detection pipeline using a standalone Python script. To allow testing on different lidar resolutions (in particular, resolutions lower than that of the training dataset), the script takes a trajectory as input, accumulates several past frames, and registers them to the current coordinate system. This trajectory can be obtained using our SLAM algorithm, or from external poses such as those provided by an INS. We originally tuned this to work with a Velodyne Puck lidar in an urban environment, but you may want to reduce the number of accumulated (trailing) frames if you are using a higher-resolution lidar.
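The accumulation idea can be sketched in a few lines of NumPy. This is a simplified illustration, not the code of the plugin: the function `accumulate_frames` is hypothetical, and we assume that each pose is a 4x4 matrix mapping sensor coordinates to a fixed world frame.

```python
import numpy as np


def accumulate_frames(frames, poses, num_trailing):
    """Merge the current frame with up to `num_trailing` past frames.

    frames: list of (N_i, 3) arrays, each in its own sensor frame.
    poses: list of 4x4 sensor-to-world matrices (assumed convention).
    The result is expressed in the sensor frame of the last (current) frame.
    """
    world_to_current = np.linalg.inv(poses[-1])
    start = max(0, len(frames) - 1 - num_trailing)
    merged = []
    for points, pose in zip(frames[start:], poses[start:]):
        # Chain past sensor -> world -> current sensor.
        past_to_current = world_to_current @ pose
        homogeneous = np.hstack([points, np.ones((len(points), 1))])
        merged.append((homogeneous @ past_to_current.T)[:, :3])
    return np.vstack(merged)


# A static object seen from two positions, the sensor moving 1 m along x.
pose_0 = np.eye(4)
pose_1 = np.eye(4)
pose_1[0, 3] = 1.0
frame_0 = np.array([[5.0, 0.0, 0.0]])  # object seen from the first position
frame_1 = np.array([[4.0, 0.0, 0.0]])  # same object seen from the second position
accumulated = accumulate_frames([frame_0, frame_1], [pose_0, pose_1], num_trailing=1)
print(accumulated)  # both rows are [4. 0. 0.]
```

Because both observations land on the same location once registered, a static object is described by more points than a single sparse scan would provide. Moving objects, on the other hand, leave a trail, which is one reason to lower the number of trailing frames when the lidar is already dense.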

This script is based on a configuration file, where the important parameters to populate are:

- categories_config_filename: the .yaml file that defines the categories and colors of the detected objects (available within the repo),

- trajectory_filename: a .vtp file containing the trajectory of the lidar (use LidarSlam as a pre-processing step to estimate it),

- lidar_filename: path to the .pcap file containing the lidar data (we haven’t tested it, but this should also work live on a lidar stream, given a powerful enough device),

- calibration_filename: the .xml calibration file; you can use the default one installed with your LidarView in lvsb/build/install/share/,

- output_directory: the output directory path,

- config_file: the .py MMDetection3D configuration file (see the MMDetection3D documentation),

- weights_file: the .pth file with the weights of a trained model
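As a rough sketch of how these parameters fit together, the snippet below stores them in a Python dictionary and checks that each file has the extension listed above. The paths are placeholders, the helper `check_config` is hypothetical, and the actual format of the configuration file shipped with the plugin may differ.

```python
from pathlib import Path

# Expected extension for each file parameter, following the list above.
EXPECTED_SUFFIX = {
    "categories_config_filename": ".yaml",
    "trajectory_filename": ".vtp",
    "lidar_filename": ".pcap",
    "calibration_filename": ".xml",
    "config_file": ".py",
    "weights_file": ".pth",
}


def check_config(config):
    """Return a list of problems; an empty list means the entries look plausible."""
    problems = []
    for key, suffix in EXPECTED_SUFFIX.items():
        if key not in config:
            problems.append(f"missing parameter: {key}")
        elif Path(config[key]).suffix.lower() != suffix:
            problems.append(f"{key} should point to a {suffix} file")
    if "output_directory" not in config:
        problems.append("missing parameter: output_directory")
    return problems


# Placeholder paths for illustration only; replace them with your own files.
example_config = {
    "categories_config_filename": "/path/to/categories.yaml",
    "trajectory_filename": "/path/to/trajectory.vtp",
    "lidar_filename": "/path/to/recording.pcap",
    "calibration_filename": "/path/to/calibration.xml",
    "output_directory": "/path/to/output",
    "config_file": "/path/to/model_config.py",
    "weights_file": "/path/to/model_weights.pth",
}
print(check_config(example_config))  # []
```

A check like this only catches obvious mix-ups, such as swapping the model configuration and the weights; it does not verify that the files exist or that they are valid.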

Before running the script, you will need to load the plugin inside LidarView:

- if it is not active yet, activate the advanced mode by ticking Advance Features in the Help menu, so that you can load the plugin,

- load the mmdetection3d_inference Python plugin using the Advance > Tools (paraview) > Manage Plugins… menu,

- if you want the plugin to be automatically loaded when opening LidarView, tick the Autoload box.

Once installed, first make sure that the plugin is loaded (if Autoload was not ticked). Now you can click on the Run Script button in the bottom left and select mmdetection3d_inference_script_example.py.

We will also provide a guide to launch the Python script in standalone mode (outside of LidarView, in your Python shell), so stay tuned for more!

Note that this guide can be used to enable any other Python tool from your system inside LidarView, so make sure to follow our blog for more examples. Let us know what processing you would like to introduce and which experiments you carry out yourself.

Results

Here are two examples running the provided script. The first one shows an autonomous car with a Velodyne PUCK (VLP-16) going around the La Doua neighborhood (Lyon, France).

The second example shows data from a Hesai PandarXT lidar, in La Cité Internationale, this time on foot.

So what’s next?

Now that we have showcased how to embed a PyTorch environment in LidarView to apply deep learning algorithms on point clouds directly within the UI, and enabled opening static point clouds (using the PDAL library) on top of live or recorded sensor data, LidarView's capabilities keep widening!

We may add tracking algorithms on top of our detection pipeline (as we already did outside of LidarView), and apply more machine learning algorithms in various fields. What about implicit scene completion, view synthesis leveraging NeRF techniques, automatic tree detection, or urban change detection from aerial/terrestrial point clouds?

Contact us to learn more about customizing LidarView to address your needs.